All Activity

- Earlier

-

OSD is looking for an old deleted boot image

anyweb replied to JayL's topic in Configuration Manager 2012

@JayL can you share the details of the fix with others so that they can get help?

OSD is looking for an old deleted boot image

Abnrangerx67 replied to JayL's topic in Configuration Manager 2012

Why do people do that? They post a question asking for help, then when it gets resolved by a solution not provided here, they don't share the fix.

Prerequsites for SCCM 2012 R2 and SCCM 1606

anyweb replied to charris211's topic in Configuration Manager 2012

check your pm.

Introduction

Windows 365 User Experience Sync (UES) is a cloud‑native capability designed to give users a seamless, consistent, and personalised Windows experience across Windows 365 Cloud PCs and Windows 365 Cloud Apps. Without UES in place on Frontline Shared devices, Windows personalisation, user settings, application settings, and application data are not retained between logoff and logon. This can be frustrating for end users, who need to reauthenticate or reconfigure their device to suit how they work, only to have to do it all again each time they log on to the Cloud PC. Instead of relying on traditional profile‑management tools, UES automatically preserves the settings and restores them every time the user signs in. Microsoft manages the UES infrastructure, vastly decreasing the implementation complexity and cost compared to other profile-based solutions.

At the core of User Experience Sync is dedicated, cloud‑hosted user storage that follows each user from session to session. When a user signs in, their individual storage is dynamically attached, providing immediate access to their personal settings and app data. As the user signs out, the profile is detached and stored securely, ready for the next session.

In this blog post, fellow MVP Niall Brady and I give an overview of this new feature and show you how you can set it up for Windows 365 Frontline Shared Cloud PCs and Cloud Apps. Niall and I were part of the private preview for the solution and were heavily involved at that stage. The feature was made generally available in November and we will be interested to see what changes have been made to the solution, based on our feedback, in that time.

Requirements

The following requirements are needed at present.
Windows 365 Frontline license
Access to required Windows 365 and Microsoft 365 endpoints
Intune management permissions

Setting it up

User Experience Sync is enabled in the configuration of the Frontline Shared Provisioning Policy. This can be done when creating a new policy, or an existing policy can be modified to add or remove the feature. Let’s focus initially on creating a policy and enabling the UES solution.

In the Intune admin center, navigate to Devices | Device onboarding | Windows 365. Select Provisioning policies from the menu and choose Create Policy. When creating the provisioning policy, ensure that you select Frontline as the License type and enable Shared from the Frontline type choice. For Experience, you can choose either Access a full Cloud PC desktop, or Access only apps which run in the cloud, since both Cloud PCs and Cloud Apps support UES.

The Configuration section of the provisioning policy wizard is where we configure the UES solution. We have two choices to make: Enable user experience sync and User Storage Size. Check Enable user experience sync to turn on the feature.

Managing the UES storage

For the User Storage Size, Microsoft offers a predefined pool of user storage that comes included with your Frontline licence. The total available storage is determined by the Cloud PC’s OS disk size and is scaled based on the number of Cloud PCs assigned within the policy. For our Frontline model, we have a Cloud PC Frontline 2vCPU/8GB/128GB licence and 1 Frontline device, therefore the amount of pooled storage available to us is 128 x 1, or 128GB. The User Storage Size allows us to assign up to 64GB per user, as you can see from the drop-down menu below. Be aware of the pooled disk space limitations when assigning the User Storage Size.
Exceeded limits: When pooled storage runs out, new users can still sign in, but they receive a temporary profile and cannot create their own user storage. Users who already have allocated storage can continue signing in with their full personalised experience.

Exceeded tolerance period: If the pooled storage limit remains exceeded, a 7‑day tolerance window begins. After this period ends, the system automatically removes individual user storage, starting with the one that has the oldest “last attach” timestamp. Once the total storage usage drops back below the limit, the tolerance period resets and won’t start again until storage is exceeded in the future.

You can manage the user storage by going to Provisioning policies and clicking your newly created policy. Next, select User storage and you will be presented with a view of the Storage information, which includes the Total, Available and Used data. Since we are currently provisioning the devices, nothing will be used.

Some recommendations to assist with UES are to:
Enable OneDrive redirection
Manage Edge for cache and temporary data retention
Deploy Storage Sense to clean up temporary files, delete downloads and cloud backed files (OneDrive)

You can set up alert monitoring for the user storage. This is achieved by navigating to Tenant administration | Alerts | Alert Rules and clicking Frontline Cloud PC User Experience Sync Storage Limits. In the System rule view, you can set the rule to alert when used space is Greater than or equal to a certain percentage. You can set a Severity for the alert, whether the Status of the rule is On or Off, whether to show a Portal pop-up Notification and whether someone should receive an Email alert. You can enter multiple recipients for the email.
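The pooled storage arithmetic described above can be sketched in a few lines of PowerShell. This is only an illustration: the function name and parameters are made up for this example, and it assumes every user consumes their full User Storage Size allocation from the pool.

```powershell
# Hypothetical helper (not part of Intune or Windows 365): estimates the pooled
# UES storage for one provisioning policy and how many users fit into it.
function Get-UesStoragePool {
    param(
        [Parameter(Mandatory)][int]$OsDiskSizeGB,       # OS disk size of the Frontline licence, e.g. 128
        [Parameter(Mandatory)][int]$CloudPcCount,       # Cloud PCs assigned within the policy
        [Parameter(Mandatory)][int]$UserStorageSizeGB   # User Storage Size chosen in the policy
    )

    # Pool = OS disk size multiplied by the number of Cloud PCs in the policy
    $poolGB = $OsDiskSizeGB * $CloudPcCount

    [pscustomobject]@{
        PoolGB   = $poolGB
        # Assumption: each user takes a full allocation out of the pool
        MaxUsers = [int][math]::Floor([double]$poolGB / $UserStorageSizeGB)
    }
}

# The example from this post: one 128GB Frontline Cloud PC, 16GB per user
Get-UesStoragePool -OsDiskSizeGB 128 -CloudPcCount 1 -UserStorageSizeGB 16
```

With those numbers the pool is 128GB, so under the stated assumption the ninth user to sign in would be the first to receive a temporary profile.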
What’s Included and Excluded from User Experience Sync (UES)

The following is included in the UES solution. User storage includes all data from C:\Users\%username%, such as:
User settings and application data
Registry files (NTUSER.dat and USRCLASS.dat)
Personal files and folders

What’s not included:

Nonroamable application data
AppData\Local\Packages\*\AC
AppData\Local\Packages\*\SystemAppData
AppData\Local\Packages\*\LocalCache
AppData\Local\Packages\*\TempState
AppData\Local\Packages\*\AppDat

Nonroamable identity data
AppData\Local\Packages\Microsoft.AAD.BrokerPlugin_cw5n1h2txyewy
AppData\Local\Packages\Microsoft.Windows.CloudExperienceHost_cw5n1h2txyewy
AppData\Local\Microsoft\TokenBroker
AppData\Local\Microsoft\OneAuth
AppData\Local\Microsoft\IdentityCache

The UES Experience

When a user signs on for the first time to a Cloud PC or Cloud App with UES enabled, the personal storage is created and attached to the session, and capture of settings is automatically enabled and redirected to the storage. On the next login, the UES storage is reattached and any saved configuration loaded. Do bear in mind that UES does not roam or persist user-installed applications; only settings and preferences are captured.

On a Frontline Shared UES enabled device, we are loading the store app Sticky Notes for the first time. We are requested to authenticate to use the app on first run. After authenticating, we can create content in the app with the expectation that this is stored within the UES storage and retained for our next session. If we do not have Frontline Shared UES enabled, then the end user would need to re-authenticate with the Sticky Notes app on each logon to bring up their personalised notes.

Installing apps

Another thing to note is what happens if the user downloads and installs an app, let’s say Firefox. While this works during the session that the user is logged on to, once they log off and log on again, any remnants of it (shortcuts, etc.) are gone.
If you want apps to persist, then install them in the base image (custom image).

Filling up the storage and reporting on usage

One of the areas we fed back on during the private preview was the lack of warning or information for the end user when the UES storage becomes full. In the screenshot below, for example, the user has downloaded too many ISO files and filled up their allocation of 16GB. The only message the end user gets is Couldn’t download – Disk full. This isn’t informative enough for the end user in our opinion. Improvements to the experience should be forthcoming and we hope that this is one which is being taken into account by Microsoft.

When it comes to usage, the admin can take a look at what allocation of their Total amount is in use in the User storage section of the Provisioning Policy. Remember from earlier, you need to click Provisioning policies and select your policy. Select User storage to view. We can now see that 16GB from the Total of 128GB has been allocated to a user. These stats do not give us a real-time view of what a user has actually used from that 16GB allocation. This is another area that we fed back on that we feel needs improvement.

If we have set up alerts and total usage across all UES storage reaches the percentage stated in the rule, then the recipient of the alert rule will receive an email with details of the provisioning policy where the usage is a concern. In Tenant administration | Alerts, admins will be alerted with the relevant Severity and with a pop-up, if enabled.

Amending an Existing Provisioning Policy

To be able to amend an existing provisioning policy to add or remove UES, you first need to remove the current group assignment from the policy. Navigate back to Provisioning policies and select your Frontline shared policy. Choose Properties. Scroll down to the Assignments and click Edit. Delete the assignment. Click Remove to confirm the removal of the assignment. Click through the wizard to Update the policy.
Now Edit the Configuration section of the policy. This is our UES policy with UES enabled. We can, therefore, remove UES by deselecting the Enable user experience sync checkbox. If this was a policy which never utilised UES, we could go in here, select the checkbox and set a corresponding User Storage Size. Once amended, Update the policy and head back to Assignments and Edit. Reassign the policy and enter the relevant Cloud PC size, select your Frontline subscription and Assignment details. The Frontline shared devices will then be in a Provisioning state as the amended configuration is laid down.

Read more

User Experience Sync for Windows 365 Frontline in shared mode – https://learn.microsoft.com/en-us/windows-365/enterprise/frontline-user-experience-sync
Troubleshoot User Experience Sync for Windows 365 Frontline in shared mode – https://learn.microsoft.com/en-us/troubleshoot/windows-365/troubleshoot-user-experience-sync

Summary

Windows 365 User Experience Sync is a welcome feature for Frontline Shared devices which has been on the wish list for some time now. It is very simple to get up and running, without all the complexity of other solutions, and provides instant access to storage of settings and application data. Microsoft is taking into account the feedback it received during the private preview and we should see some feature changes happening very shortly to improve the end user experience. We look forward to seeing how this new feature matures and develops over time and we’ll blog about the feature improvements as they are released.

-

Prerequsites for SCCM 2012 R2 and SCCM 1606

Something666 replied to charris211's topic in Configuration Manager 2012

Hello, I have the same problem: I'm unable to download the prerequisites with setupdl.exe from the ISO. Would it be possible to share a link with me? I see you have the prerequisites for SCCM 2012 RTM/SP1/SP2/R2. Thank you in advance for your reply. Regards.

can you show a screenshot of your task sequence please, and secondly, share your smsts.log

-

Chris Payne started following Domain filtering

-

Hello all, I hope everyone's new year is going well. In our environment we have one parent domain and two child domains. For example we have test.com, alpha.test.com and beta.test.com. We have separate defined prefixes for each domain and we have separate task sequences for each domain. I am trying to combine the task sequences into one.

We have OSDCOMPUTERNAME set as a task sequence variable on the unknown computer collection, so we have to name the computer at the start of the task sequence. In the combined task sequence there are groups called, for example, Join Test Domain, Join Alpha Domain, and Join Beta Domain. Under each of the groups is the appropriate Apply Network Settings step to join the selected domain.

I have tried using both a WMI query and task sequence variables to filter the computers to the correct domain based on their prefix or their name. For the WMI query I have used Select * from Win32_ComputerSystem where Name like "A%" and for the task sequence variable I have used OSDCOMPUTERNAME Like "A*". Neither of these two methods has worked because, even though we named the computer at the start of the TS, the computer names are still showing in the log as MININT-XXXX.

Any suggestions on how I would need to call on the OSDCOMPUTERNAME that we used at the beginning of the TS? At the start of the TS I have tried to set the task sequence variable to OSDCOMPUTERNAME, just like we have on the Unknown computer collection, but it is not prompting me to enter a computer name. I am grateful for any help or advice, thanks.

-

Introduction

I started my day as always and launched the Windows app. I clicked my Cloud PC, entered my credentials and after a short delay I got the error shown below. The error was “something went wrong” with a not very useful error code, -895352830. I tried this on multiple tenants with the same error code being generated. I asked Copilot what this meant and got the following details:

This code corresponds to an AADSTS65002 token/permission issue during authentication. Microsoft documentation shows the same error pattern: Error: -895352830 (0xCAA20002), which occurs when a Microsoft first‑party app is missing required preauthorized API consent, causing token broker authentication to fail. [learn.microsoft.com]

This means the Windows App cannot obtain a valid authentication token due to a Microsoft Entra (Azure AD) app permission issue, often triggered by:
Broken SSO token broker
A corrupted local identity cache
A bad Windows Update interfering with Windows App authentication

The last point above (a bad Windows Update) is indeed the cause. More details below:

January 2026 security patches (notably KB5074109) are currently breaking logins in the Windows App and Remote Desktop authentication:
Microsoft confirms widespread credential failures after the January 2026 update, impacting Windows App sign-ins for Azure Virtual Desktop & Windows 365. [theregister.com]
Users report the app immediately fails with authentication errors after pressing Connect. [theregister.com]
Removing KB5074109 restores normal Windows App login functionality (confirmed by multiple users on Jan 15–16, 2026). [learn.microsoft.com]

According to theregister.com: The upshot is that connecting to Windows 365 or Azure Virtual Desktop from the Windows App could be borked due to credential problems.
Microsoft posted: “Investigation and debugging are ongoing, with coordination between Azure Virtual Desktop and Windows Update teams.” The problem is widespread and appears to affect every supported version of Windows, from Windows 10 Enterprise LTSC 2016, right up to Windows 11 25H2. Windows Servers 2019 to 2025 are also affected.

Great, so now what?

The suggested workaround is to connect to your Cloud PC using the soon to be unsupported Remote Desktop Client Agent, available from here.
Windows 64-bit
Windows 32-bit
Windows ARM64

I tried it, but it too failed to connect. Next, I tried via a web browser, but that also didn’t work. The Cloud PC I’m trying to connect to is protected using the new CKIO feature and the web browser version does not support that.

Verifying the problem

A quick look in Intune revealed that the problematic CU was indeed applied to my Cloud PCs and to the PC I was making the connection from (Windows 11 25H2 ARM64). Here’s a sample. Below you can see the CU details:
10.0.26200.7623
10.0.26100.7623
https://support.microsoft.com/en-us/topic/january-13-2026-kb5074109-os-builds-26200-7623-and-26100-7623-3ec427dd-6fc4-4c32-a471-83504dd081cb

Within that article you can see the known issues, including the suggested workaround, which is to install KB5077744. As it’s so new, this update is not even available in Windows Autopatch, so you cannot currently use an expedite updates policy to deploy it.

KB5077744 is an Out‑of‑Band (OOB) update for Windows 11 25H2/24H2. It is not delivered through Windows Update automatically and must be manually downloaded and installed from the Microsoft Update Catalog. You must use the Microsoft Update Catalog to obtain the standalone package (MSU). [support.mi…rosoft.com]

The fix

As KB5077744 is only currently available (at the time of writing, 2026/01/19) via Microsoft’s Catalog, you must deploy it (the msu) from Intune by wrapping it as a Win32 app.
Because Intune does not directly deploy .MSU updates as updates, the supported method is to wrap the MSU as a Win32 app and deploy it to your affected clients.

Go to the Microsoft Update Catalog and search for kb5077744. Download the update that is applicable to your Windows OS version. Be aware that the file is big; my download was approx 3.6GB.

Place the downloaded msu file and this PowerShell script in a folder called KB5077744. Here are the contents of the PowerShell script. Modify it so that it has the exact file name of the msu file you downloaded, otherwise it won’t install. Save the file as Install_KB5077744.ps1.

wusa.exe windows11.0-kb5077744-x64_fb63f62e4846b81b064c3515d7aff46c9d6d50c8.msu /quiet /forcerestart
exit $LASTEXITCODE

Note: If you want to control reboot notifications and other options, package this instead using the PowerShell Application Deployment Toolkit and customize those options as necessary.

Using Intunewinapputil.exe, wrap the package as a Win32 app. Then add the Win32 app, using this install command line

powershell.exe -ExecutionPolicy Bypass -File Install_KB5077744.ps1

and the following detection script.

$sysinfo = systeminfo.exe
$result = $sysinfo -match "KB5077744"
if ($result) {
    Write-Output "Found KB5077744"
    exit 0
} else {
    Write-Output "KB5077744 not found"
    exit 1
}

Finally, after deploying the update to your affected clients, and waiting for it to install and restart, you’ll be able to connect again. Phew. What a relief.

The new Windows build number is highlighted below: 10.0.26200.7627 from a Windows 11 25H2 Cloud PC. Here you can see it successfully installed on the client and, of course, via Intune. However, Intune has not yet updated the build number of the CPC in the Win32 app install status.

Update

It seems you need to patch the host PC making the connection to the Cloud PC also, if it’s running x64 Windows and has the .7623 patch level (January update).
Strangely I didn’t need to patch the ARM PC at all even though it had the .7623 patch level.

Related reading

Reddit: https://www.reddit.com/r/AzureVirtualDesktop/comments/1qc3g1x/comment/nzmy4fv/
theregister: https://www.theregister.com/2026/01/15/windows_app_credential_failures/
KB5077744 – https://support.microsoft.com/en-us/topic/january-17-2026-kb5077744-os-builds-26200-7627-and-26100-7627-out-of-band-27015658-9686-4467-ab5f-d713b617e3e4#id0ejbd=catalog
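As an alternative to parsing systeminfo.exe output in the detection script, the build revision could be compared instead. The sketch below is untested against Intune and rests on one assumption taken from the KB title: on builds 26100 and 26200, a revision of 7627 or later contains the fix. The function names are made up for this example; the CurrentBuild and UBR values are read from the standard CurrentVersion registry key.

```powershell
# Hypothetical helper: decides whether a build/revision pair already contains the fix.
# Assumption: revision 7627 (from the KB5077744 title) or later is fixed on 26100/26200.
function Test-HasOobFix {
    param(
        [Parameter(Mandatory)][int]$Build,      # e.g. 26200
        [Parameter(Mandatory)][int]$Revision,   # e.g. 7627 (the UBR registry value)
        [int]$MinimumRevision = 7627
    )
    if ($Build -notin 26100, 26200) {
        # Other Windows versions use different revision numbers; this check says nothing about them
        return $false
    }
    return ($Revision -ge $MinimumRevision)
}

# Hypothetical helper: reads the local build and revision from the registry (Windows only)
function Get-LocalBuildInfo {
    $cv = Get-ItemProperty -Path 'HKLM:\SOFTWARE\Microsoft\Windows NT\CurrentVersion'
    [pscustomobject]@{
        Build    = [int]$cv.CurrentBuild
        Revision = [int]$cv.UBR
    }
}

# The two patch levels mentioned in this post
Test-HasOobFix -Build 26200 -Revision 7623   # January update, not fixed
Test-HasOobFix -Build 26200 -Revision 7627   # out-of-band update, fixed
```

In a detection script you would pass the values from Get-LocalBuildInfo to Test-HasOobFix, then exit 0 when it returns true and exit 1 otherwise. A revision comparison also keeps detecting the app as installed once a later cumulative update replaces KB5077744.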

-

Random Issue Plaguing OSD Deployments: Exit Code 16389

anyweb replied to BzowK's topic in Configuration Manager 2012

Based on what a previous poster said, I guess you could create a PowerShell script that checks whether an msiexec process is running and, if so, waits until it's done before installing the app. Have you tried that? Here's some untested code that you could use as a wrapper for each app, maybe it will help:

[CmdletBinding()]
param(
    [int]$TimeoutSeconds = 0,        # 0 = wait indefinitely
    [int]$PollIntervalSeconds = 3,   # how often to poll
    [switch]$Quiet                   # suppress non-verbose status messages
)

function Write-Info {
    param([string]$Message)
    if (-not $Quiet) { Write-Host $Message }
}

function Test-MsiInProgressRegistry {
    # Windows Installer sets this key while an MSI is actively installing
    $keyPath = 'HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\Installer\InProgress'
    try {
        # Some builds create the key with values; treat existence as "busy"
        if (Test-Path $keyPath) { return $true }
    } catch {}
    return $false
}

function Get-MsiexecProcesses {
    # Fetch msiexec.exe along with command lines (CIM needed for CommandLine)
    try {
        return Get-CimInstance Win32_Process -Filter "Name='msiexec.exe'" -ErrorAction SilentlyContinue
    } catch {
        # Fallback to Get-Process (no command line available)
        try {
            return (Get-Process -Name msiexec -ErrorAction SilentlyContinue | ForEach-Object {
                [pscustomobject]@{
                    ProcessId   = $_.Id
                    Name        = $_.ProcessName
                    CommandLine = $null
                }
            })
        } catch {
            return @()
        }
    }
}

function Test-MsiexecDoingWork {
    # Heuristic: if command line indicates an actual MSI operation (install/repair/uninstall/update)
    param(
        [Parameter(Mandatory)]
        $Proc
    )
    $cl = $Proc.CommandLine
    if ([string]::IsNullOrWhiteSpace($cl)) {
        # If we lack command line, be conservative: any msiexec process could be active
        return $true
    }
    # Common operation switches for msiexec:
    # /i, /x, /f*, /update, /uninstall, /package, /qn, /qb, /passive often accompany installs
    $patterns = @(
        '\s/([ix])\b',     # /i install, /x uninstall
        '\s/f[a-z]*\b',    # /f, /fa, /fu, etc. (repair)
        '\s/update\b',     # patch/update
        '\s/uninstall\b',
        '\s/package\b',
        '\.msi(\s|$)',     # explicit MSI file
        '\.msp(\s|$)'      # patch file
    )
    foreach ($p in $patterns) {
        if ($cl -match $p) { return $true }
    }
    # Sometimes msiexec runs as the service side without clear switches; if in doubt, treat as busy
    return $true
}

function Test-MsiBusy {
    # Returns $true if Windows Installer is likely busy
    $procs = @(Get-MsiexecProcesses)
    if ($procs.Count -gt 0) {
        foreach ($p in $procs) {
            if (Test-MsiexecDoingWork -Proc $p) { return $true }
        }
    }
    if (Test-MsiInProgressRegistry) { return $true }
    return $false
}

# ----------------------------
# Main wait loop
# ----------------------------
$start = Get-Date
if ($TimeoutSeconds -gt 0) {
    Write-Info "Waiting for Windows Installer (msiexec) to finish (timeout: $TimeoutSeconds s, poll: $PollIntervalSeconds s)..."
} else {
    Write-Info "Waiting for Windows Installer (msiexec) to finish (no timeout, poll: $PollIntervalSeconds s)..."
}

# If already idle, return immediately
if (-not (Test-MsiBusy)) {
    Write-Info "Windows Installer appears idle."
    return
}

# Otherwise, poll until idle or timeout
while (Test-MsiBusy) {
    if ($TimeoutSeconds -gt 0) {
        $elapsed = (New-TimeSpan -Start $start -End (Get-Date)).TotalSeconds
        if ($elapsed -ge $TimeoutSeconds) {
            Write-Warning "Timed out waiting for Windows Installer after $([int]$elapsed) seconds."
            exit 1
        }
        if (-not $Quiet) {
            $remaining = [math]::Max([int]($TimeoutSeconds - $elapsed), 0)
            Write-Host ("MSI busy... ({0}s remaining). Next check in {1}s." -f $remaining, $PollIntervalSeconds)
        }
    } else {
        if (-not $Quiet) { Write-Host "MSI busy... next check in $PollIntervalSeconds s." }
    }
    Start-Sleep -Seconds $PollIntervalSeconds
}

Write-Info "Windows Installer is now idle."
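To use the script above as a wrapper, something like the following could work. It's just as untested, and it assumes you saved the script as Wait-ForMsi.ps1 next to the installer; that file name and MyApp.msi are placeholders, not anything from this thread.

```powershell
# Hypothetical wrapper: wait for Windows Installer to go idle, then install one MSI.
# Wait-ForMsi.ps1 and MyApp.msi are placeholder names; adjust them to your package.
$waitScript = Join-Path $PSScriptRoot 'Wait-ForMsi.ps1'
$msiPath    = Join-Path $PSScriptRoot 'MyApp.msi'

# Reset first, because the wait script only sets an exit code when it times out
$global:LASTEXITCODE = 0
& $waitScript -TimeoutSeconds 600 -PollIntervalSeconds 5

if ($LASTEXITCODE -ne 0) {
    Write-Warning 'Windows Installer was still busy after the timeout, not starting the install.'
    exit 1
}

# Run the install silently and hand msiexec's exit code back to the task sequence
$install = Start-Process -FilePath 'msiexec.exe' `
    -ArgumentList '/i', "`"$msiPath`"", '/qn', '/norestart' `
    -Wait -PassThru
exit $install.ExitCode
```

A finite timeout is probably the safer choice here, since the wait script treats any running msiexec process as busy and would otherwise wait indefinitely.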

Random Issue Plaguing OSD Deployments: Exit Code 16389

khluz replied to BzowK's topic in Configuration Manager 2012

Hi all. It's now 2026 and we also have this issue: it happens randomly, on any task sequence, with any application being installed. We are on SCCM Current Branch 2503. Not the newest, but not old. Sometimes we can solve the problem by deleting the application from the task sequence and adding it again. Sometimes it helps to update the distribution point with the failed application. It's random. Does anybody have a real solution or an official statement from Microsoft? Thanks for any help. Sincerely, CL

Prerequsites for SCCM 2012 R2 and SCCM 1606

anyweb replied to charris211's topic in Configuration Manager 2012

it's no longer supported so i doubt it, i'll share a link with you

Prerequsites for SCCM 2012 R2 and SCCM 1606

charris211 replied to charris211's topic in Configuration Manager 2012

Are the prerequisites for SCCM 1702 still downloadable from Microsoft? My hard drive was erased and I no longer have the prerequisites for that version.

Introduction

While Windows 365 Cloud PCs deploy robust perimeter defences, such as encrypted transmission channels and multi-factor authentication that thwart network-level attacks, a critical vulnerability persists at the endpoint itself. Malicious software operating on local devices, particularly keystroke capture tools, can intercept sensitive information before it ever reaches the cloud environment. These endpoint-resident threats create regulatory exposure and potential financial damage that traditional cloud security measures cannot address.

Windows Cloud IO Protection closes this gap by introducing a kernel-level driver and system-level encryption that sends keystrokes directly to the Cloud PC, bypassing OS layers vulnerable to malware. When enabled on a Cloud PC it enforces a strict trust model:
Only verified physical endpoints can connect.
Endpoints must have the Windows Cloud IO Protect MSI installed.
If the MSI is missing, the session is blocked and an error is displayed.

This ensures a secure, uncompromised input channel between the Windows client and the Cloud PC. In this blog post, fellow MVP Paul Winstanley and I give an overview of this new feature and show you how you can set it up for Windows 365 Cloud PCs.

Requirements

The following requirements are needed at present. The feature is in public preview and could change as it becomes Generally Available:
Host physical Windows 11 devices
Devices must have TPM 2.0
Host devices must have the Windows Cloud IO Protect agent installed
Download the Windows x64 release
Download the Windows ARM 64 release
Windows App running 2.0.704.0 or later

The following is not currently supported:
macOS
iOS
Android
Windows 365 Link
Using the Web client

Setting it up

When it comes to setting up the Windows Cloud Keyboard Input Protection feature, Microsoft has an interesting approach in that its documentation currently only supports using group policy to achieve this.
The setting Windows Components > Remote Desktop Services > Remote Desktop Session Host > Azure Virtual Desktop > Enable Keyboard Input Protection needs to be enabled. During our testing phase, when we were fortunate enough to be part of the private preview release, we had to set the following registry keys for Windows 365 devices, with just the former key being required for AVD devices:

HKEY_LOCAL_MACHINE\SOFTWARE\Policies\Microsoft\Windows NT\Terminal Services\
DWORD - fWCIOKeyboardInputProtection
Value - 1

HKLM\SYSTEM\CurrentControlSet\Control\Terminal Server\RdpCloudStackSettings
DWORD - SecureInputProtection.KeyboardProtection.IsEnabled
Value - 1

To assist with this on your Entra only Cloud PCs, we have created an Intune proactive remediation script to set the values, if they are not present on your Windows 365 devices. These scripts need to be targeted to the Cloud PCs, and not the host devices. You can download the detection and remediation scripts from GitHub.

Adding the Proactive Remediation script for Keyboard Protection

In the Intune admin center, navigate to Devices | By platform | Windows | Manage devices | Scripts and remediations. Ensure you are in the Remediation view and click Create. Enter a Name for the custom script and add an optional Description. Click Next when done.

Click the browse icon for the Detection script file and upload the Detect-KeyboardProtection.ps1 downloaded from GitHub. Repeat the process for the Remediation script file by uploading the Remediate-KeyboardProtection.ps1 script. Leave all other options as default and click Next.

At the Assignments section, choose the group of devices that you wish to target. You can adjust the schedule for the execution of the remediation by clicking the Daily link under Schedule. For example, for our testing we have set the remediation to run Hourly. Click Apply when you have made your adjustment. The new schedule will be reflected accordingly in the Intune console.
When you have completed the proactive remediation wizard, you will see the new remediation listed with a Status of Active. When the script has executed against devices, the Detection status will be reported back to Intune with statuses of Without issues, With issues, Failed or Not applicable. Any Remediation status data will also be reported where applicable.

Installing the Windows Cloud Input Protect MSI Agent

Referring back to the Microsoft documentation, the MSI install is documented using a manual method of installing the application, which would require local admin rights. Instead, we can push the Windows Cloud Input Protect MSI via Microsoft Intune to an Intune managed host device. We can configure the application as a Win32 app and, therefore, we need to use the Microsoft Win32 Content Prep Tool, which you can download from https://github.com/microsoft/Microsoft-Win32-Content-Prep-Tool, to convert the app into the .intunewin format. Here is a blog written back in 2019 on how to use the Content Prep Tool: https://sccmentor.com/2019/02/17/keep-it-simple-with-intune-4-deploying-a-win32-app/

We can package the MSI into the required .intunewin format with three simple switches:
-c – this points the tool to the source folder containing the binaries
-s – this points the tool to the setup file used to execute the application
-o – this tells the tool where to output the .intunewin to

Once the tool is run, it quickly creates the .intunewin file for the Windows Cloud Input Protect MSI. We can now take this file and import it into Intune when we create the Win32 app.

Navigate to Apps | Platform | Windows, in the Intune admin center. Click Create. From the App type drop-down, select Windows app (Win32) and then choose Select. You will now be navigating through the Add App wizard. Start by clicking Select app package file. Click the browse icon and locate the .intunewin file that you created. Click OK to upload the file.
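For reference, the packaging step with the three switches described above can be sketched as follows. The folder paths and the MSI file name are placeholders chosen for this example, so substitute the name of the release you actually downloaded.

```powershell
# Placeholder paths and file name for illustration only
$sourceFolder = 'C:\Packages\WindowsCloudIOProtect'      # -c: folder containing the MSI
$setupFile    = 'WindowsCloudIOProtect-x64.msi'          # -s: setup file inside that folder (hypothetical name)
$outputFolder = 'C:\Packages\Output'                     # -o: where the .intunewin is written

# Run the Content Prep Tool from the folder it was downloaded to
& '.\IntuneWinAppUtil.exe' -c $sourceFolder -s $setupFile -o $outputFolder
```

Keeping only the MSI in the source folder is a sensible habit, since the tool packages everything it finds under the folder given to -c.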
Details will be automatically added to the App information fields. The only mandatory field you will need to complete is the Publisher field. Feel free to add any further information you require. Click Next when done.

In the Program section of the wizard, further details will be automatically added, such as Install command and Uninstall command. We will use these default values for our application. Click Next to continue.

In the Requirements section, you can be selective on the specific requirements for the installation of the app. We have ensured that Yes. Specify the systems the app can be installed on is selected and we have chosen Install on x64 systems as we downloaded the x64 release of the MSI. You must also select a Minimum operating system level for the install. Click Next when you have completed this section.

For the Detection rules, we have kept this simple and chosen Manually configure detection rules from the Rules format drop-down and chosen the MSI type, which automatically uses the MSI ID for the application.

Finally, a reminder that when targeting the Windows Cloud Input Protect MSI agent, we are targeting Windows 365 host devices and not the Cloud PCs themselves.

When the Windows Cloud Input Protect MSI agent installs, you will see an entry for the Windows Cloud IO Protection driver in Programs and Features on your host device and, hopefully, this is reflected in the Intune admin center in the app's Overview section.

Connecting from a host device which is not running the Windows Cloud Input Protect MSI agent

At present, there is no visual indication to let you know if the Cloud PC is running with keyboard protection enabled, and this was one of the feedback areas in the private preview. It is coming and we should expect something fairly soon. When you attempt to connect from a device which is not running the agent, you will get the following error dialog box, and Windows App will report similar.
As for the visual indicator, we expect to see something similar to the AI-enabled heading on a device which has the AI features enabled. Fingers crossed that this arrives soon. Connecting from an unsupported device platform can produce different error messages that are less intuitive. For example, the screenshot below shows the error when connecting from the Windows App on an Android device. Hopefully, support will be extended to other device platforms over time, and error messages updated to reflect the connection issue.

Read more

- Keyboard Input Protection for Windows 365 and Azure Virtual Desktop now in preview – https://techcommunity.microsoft.com/blog/windows-itpro-blog/keyboard-input-protection-for-windows-365-and-azure-virtual-desktop-now-in-previ/4468102
- Windows Cloud IO Protection – https://learn.microsoft.com/en-us/windows-365/enterprise/windows-cloud-input-protection

Summary

Windows Cloud IO Protection is a powerful security feature that ensures keystrokes are encrypted and sent directly to the Cloud PC, bypassing OS layers vulnerable to malware. By requiring trusted endpoints with the protection agent installed, it creates a secure, uncompromised input channel that blocks keyloggers and prevents data leaks. Microsoft is taking into account the feedback it received during the private preview and we should see some feature changes happening very shortly to improve the end user experience. Beyond this, we hope that support is adopted for all device platforms, so that this security feature becomes OS agnostic. We look forward to seeing how this new feature matures and develops before being released to production.

-

Introduction

Microsoft have invested a lot of time, effort and money in adding AI abilities to core parts of the Windows operating system and the apps that run on it, for example Office 365 apps or dedicated AI apps such as Microsoft 365 Copilot. My good friend Paul Winstanley and I took a look at a new feature that adds select AI features to Windows 365 Cloud PCs, and we share our thoughts below.

Windows 365 AI-enabled Cloud PCs run in Microsoft’s Cloud and stream AI-powered Windows to any device and platform. This allows you to use select AI features that would traditionally be found on physical NPU powered Copilot+ PCs. Those select AI features are:

- Improved Windows search
- Click to Do

You can test these AI features on any device using your Cloud PC. For example, below you can see an AI-enabled Cloud PC available from an Android powered phone.

Note: In the screenshots below from our Android phones, the AI-enabled Cloud PC has an AI-enabled label in the top left corner.

But first, let’s take a look at what you need in order to set this up.

Requirements

The requirements below will most likely change as this becomes Generally Available, but to test it right now in this Frontier release you’ll need to do the following:

- The end user must be registered in the Windows Insider program
- The Cloud PC must be in the Windows Insider Beta channel
- Enable Data diagnostics in your settings
- Have a Cloud PC that supports AI abilities (8 vCPU, 32GB RAM, 256GB HDD)
- The Cloud PC must be in a supported location

Setting it up

To set it up, make sure you’ve enabled data diagnostics in your Windows Privacy settings, as shown here. Set Send optional diagnostics data to On. Next, enroll into the Windows Insider Program and select the Beta Channel (Recommended). Once done you’ll most likely have to restart the CPC, so go ahead and do that. Next, you’ll need to configure a policy (targeted to users) which enables the feature for Cloud PCs that meet the minimum requirements.
To do that, select the Windows 365 node in Intune, click Settings and select Create to create the policy. Select Cloud PC configurations (preview) from the options presented. In the Configuration settings part of the wizard, select Enable from the drop-down menu to enable AI features. On the Assignments screen, select a previously created Entra ID group containing the users that will be using this new ability. Once done, make sure one or more users are in that group and the policy should flow to the CPCs.

Exploring the new AI abilities

The first thing you’ll most likely notice is that your AI-enabled Cloud PCs are now clearly labelled with an AI-enabled label in the Windows app. After connecting to that Cloud PC, you’ll notice the search icon looks different. AI-enabled Cloud PCs are marked by a magnifying glass with a sparkle icon within the search box on the taskbar. Those of you who already have physical Copilot+ PCs will already be familiar with this new search icon.

The new AI search abilities are supposed to be better than built-in traditional search, so we put it to the test. We decided to start by simply searching for a word via the search feature in Windows File Explorer on the user’s OneDrive. We performed the same search on the AI-enabled CPC as well as on a regular non-AI powered physical device. The AI search results are on the left, and the traditional search results on the right. Look at the difference, 37 hits versus 10, not bad at all. Let’s try another search. This time again, there are more results from the AI search than from traditional search.

Click to Do

Microsoft Click to Do is a feature on all Copilot+ PCs and Windows 365 AI-enabled Cloud PCs. To use it, simply search for it in the Start menu and click the app. Once started, you can browse websites with pictures of text (for example) and press the Windows key + mouse click anywhere in the website.
This can give you options to do things with what it sees, such as copying text within an image to paste into your favorite text editor. Depending on what you click on, you get more or fewer options, such as below when clicking on the image of a blue CRT. Cool!

Changes in Intune

When using Windows 365 AI-enabled Cloud PCs there are some additional things that you can see in the Intune portal to reveal the finer details. There’s a report here to show AI-enabled CPCs and what (if anything) is wrong with them. To find it, select Reports, and select Cloud PC overview. Select the AI-enabled Cloud PC report, and you can then click through the Status column for any Cloud PC that has reported data up to Intune. Once you’ve fixed the issues reported, which in our case meant we had provisioned the Cloud PC in a location that was not yet supported for AI-enabled abilities, you’ll see a new status of Ready to use. You can also see a new status in the Cloud PCs overview, called AI-enabled, which will report Yes or No.

Read more

- AI-enabled Cloud PCs – https://learn.microsoft.com/en-us/windows-365/enterprise/ai-enabled-cloud-pcs
- Experience next-gen productivity with Windows 365 AI-enabled Cloud PCs – https://techcommunity.microsoft.com/blog/windows-itpro-blog/experience-next-gen-productivity-with-windows-365-ai-enabled-cloud-pcs/4467875
- Experience next-gen productivity with Windows 365 AI-enabled Cloud PCs – https://youtu.be/CvL8UXCYzDk
- Using Microsoft Click-To-Do – https://support.microsoft.com/en-us/windows/click-to-do-do-more-with-what-s-on-your-screen-6848b7d5-7fb0-4c43-b08a-443d6d3f5955

Summary

This new feature via the Microsoft Frontier Program has a lot of cool potential! It’s great to see that Microsoft is continuing its investment in Windows 365 Cloud PC development, and adding key features like this brings the power of AI to all devices, whether they are Android, macOS or Windows. We look forward to seeing how this new feature matures and develops before being released to production.

-

can't you boot it into safe mode and then uninstall whatever driver/app that caused the problem ? as it's an old Windows 7 computer have you verified if the disk (hdd) is ok ??

-

Rebooted an old Dell 980 (that has been bulletproof for years), saw the Windows boot image, then a BLACK screen and a live (movable) cursor. Nothing I do seems to affect it. Replaced the (weak old) power supply; now it boots to a black screen faster. Tried using a Win 7 recovery ISO and was able to get it to attempt a restore. Recent restore (a few days ago): same problem. Restore from 20 days ago: same problem. Restore from 21 days ago: the restore died with a 0x800700B7 error. (My noob guess is a bad driver update by the Avast updater, but there is no way to know which one, or even if it happened.) Thanks for listening. (I have a Win10 workstation, a WinXP workstation, a Win 11 laptop, a Win8 media PC, and an SGI IRIX. This is the only machine pissing me off.) Help please.

-

task sequence [Help] - Powershell script SMSTSPostAction

Abnrangerx67 replied to Deilson Oliveira's question in scripting

Sure, this was four years ago, but I think it is selfish that you posted for help and, glad as I am that you got it working, did not share how you got it working. I am not seeking the fix, but it would help others that may be. However, as suggested (and I'm not sure why you opted for the post action route), it makes sense to install it as an application or a package, or to publish the app so that, based on a query or whatever means of targeting, it gets installed post OSD.


-

Windows 11 DAO acces to old SQL Server 2005/2008 database

anyweb replied to cliv's question in SQL Server

can't you use Windows 10 LTSC then ? (Long Term Servicing Channel) ? -

I maintain an application that I developed over 20 years ago in VB6. The application uses DAO to access databases on two old servers running SQL Server 2005 and SQL Server 2008. It is installed on nearly 50 computers with operating systems ranging from WinXP to Win10 and runs smoothly. The program uses DAO and ODBC DSN-less connection strings to access data using DRIVER={SQL Server}. The last driver that worked in Windows 10 and with SQL Server 2005/2008 was sqlsrv32.dll version v.10.0.19041.6456. After PCs started updating to Windows 11, it no longer works. On Windows 11, Microsoft ships a newer sqlsrv32.dll (now v.10.0.26100.3624) that has hardened TLS/SSL behavior, which breaks connectivity to SQL 2005. I thought about manually registering the old driver alongside the new one in ODBC.

What I've done so far in Win11:

1. Activated TLS 1.0 (SQL Server 2005 speaks TLS 1.0 only; SQL Server 2008–2012 speak TLS 1.0 / 1.1 / partial 1.2).

2. The old driver after the update is in "C:\Windows.old\Windows\SysWOW64\sqlsrv32.dll", so I created a new entry in the registry with a new name {SQL Server (Legacy)} and that location:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\ODBC\ODBCINST.INI\SQL Server (Legacy)]
"Driver"="C:\\Windows.old\\Windows\\SysWOW64\\sqlsrv32.dll"
"Setup"="C:\\Windows.old\\Windows\\SysWOW64\\sqlsrv32.dll"
"ResourceFile"="C:\\Windows.old\\Windows\\SysWOW64\\sqlsrv32.rll"
"UsageCount"=dword:00000001
"DriverODBCVer"="02.50"
"APILevel"="2"
"ConnectFunctions"="YYY"
"SQLLevel"="1"
"FileUsage"="0"
"CPTimeout"="60"

[HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\ODBC\ODBCINST.INI\ODBC Drivers]
"SQL Server (Legacy)"="Installed"

3. Rebooted.

4. Opened C:\Windows\SysWOW64\odbcad32.exe -> Drivers tab -> confirmed that “SQL Server (Legacy)” appears ... but when I try to create a DSN using [SQL Server (Legacy)] it fails.

I also tried the same (manual extract and register) with the driver {SQL Server Native Client 11.0}, sqlncli11.dll v.11.4.7001.0, and sqlnclir11.rll in C:\Windows\SysWOW64\1033\, because the 32-bit version cannot be installed using the installer on Win11. The driver appears in the Drivers tab as “SQL Server Native Client 11.0”, but creating a DSN for a test also fails.

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\ODBC\ODBCINST.INI\SQL Server Native Client 11.0]
"Driver"="C:\\Windows\\SysWOW64\\sqlncli11.dll"
"Setup"="C:\\Windows\\SysWOW64\\sqlncli11.dll"
"ResourceFile"="C:\\Windows\\SysWOW64\\1033\\sqlncli11.rll"
"DriverODBCVer"="03.80"
"APILevel"="2"
"ConnectFunctions"="YYY"
"SQLLevel"="1"
"FileUsage"="0"
"CPTimeout"="60"
"UsageCount"=dword:00000001

[HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\ODBC\ODBCINST.INI\ODBC Drivers]
"SQL Server Native Client 11.0"="Installed"

I cannot update SQL Server because the servers are used in an industrial automation project, and the app has become too complex over time, so updating it is also difficult. Any suggestions please!
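Since both registry exports above follow the same pattern (a driver subkey under ODBCINST.INI plus an "Installed" marker under ODBC Drivers), the layout can be sketched in Python so the path escaping and dword formatting stay consistent between attempts. This is only an illustration of the file format used in the post. The function name and its parameters are made up for this sketch, and generating the file this way is not claimed to resolve the DSN failure described.

```python
def render_odbc_driver_reg(driver_name, values, wow6432=True):
    """Render .reg text registering an ODBC driver (hypothetical helper)."""
    root = r"HKEY_LOCAL_MACHINE\SOFTWARE"
    if wow6432:
        # 32-bit drivers on 64-bit Windows are registered under WOW6432Node.
        root += r"\WOW6432Node"
    base = root + r"\ODBC\ODBCINST.INI"

    lines = ["Windows Registry Editor Version 5.00", "", f"[{base}\\{driver_name}]"]
    for name, value in values.items():
        if isinstance(value, int):
            # Integers are written as 8-digit hexadecimal dword values.
            lines.append(f'"{name}"=dword:{value:08x}')
        else:
            # Backslashes and quotes must be escaped inside .reg strings.
            escaped = value.replace("\\", "\\\\").replace('"', '\\"')
            lines.append(f'"{name}"="{escaped}"')

    # The driver must also be listed under "ODBC Drivers" as Installed.
    lines += ["", f"[{base}\\ODBC Drivers]", f'"{driver_name}"="Installed"']
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Values copied from the first export in the post above.
    print(render_odbc_driver_reg(
        "SQL Server (Legacy)",
        {
            "Driver": r"C:\Windows.old\Windows\SysWOW64\sqlsrv32.dll",
            "Setup": r"C:\Windows.old\Windows\SysWOW64\sqlsrv32.dll",
            "UsageCount": 1,
        },
    ))
```

Rendering the text rather than writing to the registry directly keeps the sketch safe to run anywhere; the output can be reviewed and compared against the hand-written exports before anything is imported.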

-

Prerequsites for SCCM 2012 R2 and SCCM 1606

anyweb replied to charris211's topic in Configuration Manager 2012

hi Andy, drop me a message and i'll make them available to you cheers niall -

Prerequsites for SCCM 2012 R2 and SCCM 1606

andyburness replied to charris211's topic in Configuration Manager 2012

Hi all, In my home lab I'm trying to set up a test SCCM 2012 R2 instance in a VM. I'm also not able to download the prerequisites any more. Is there any chance I could please also get access to a copy of the SCCM 2012 R2 prerequisites? For some context as to why I want to use such an old version in 2025, SCCM 2012 R2 is what I have a license for, I installed it years ago but didn't archive the prerequisites at the time, and the server I used back then has long since been formatted many times, I like playing with old tech, I have some older Windows XP and Mac OS computers which I want to try to get working with SCCM 2012 R2, just to see if I can, This is going to be an internal only lab, on my home network, not used for anything productive and not exposed to the internet. -

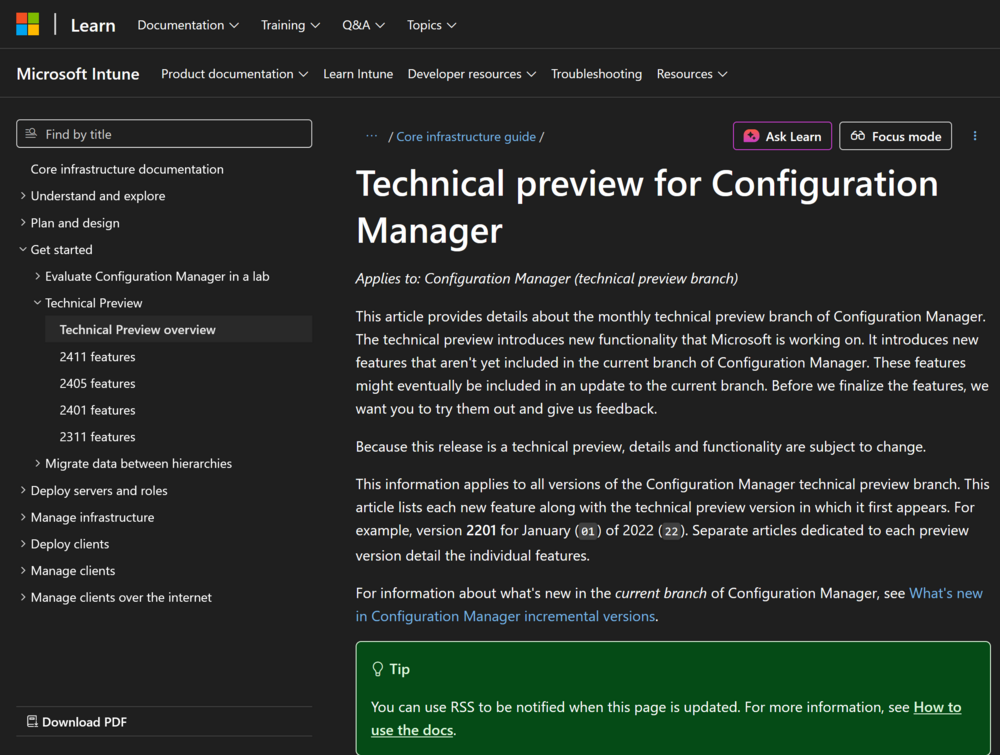

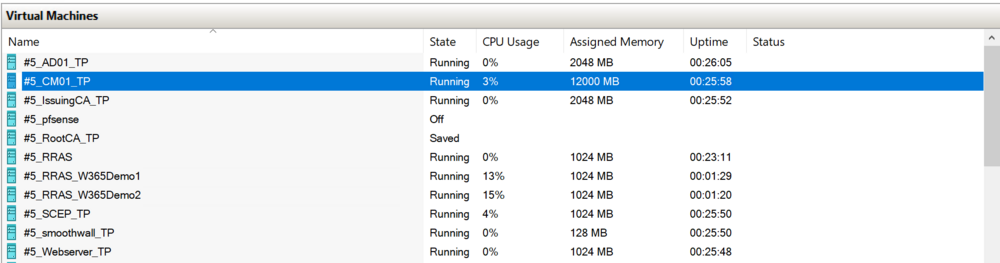

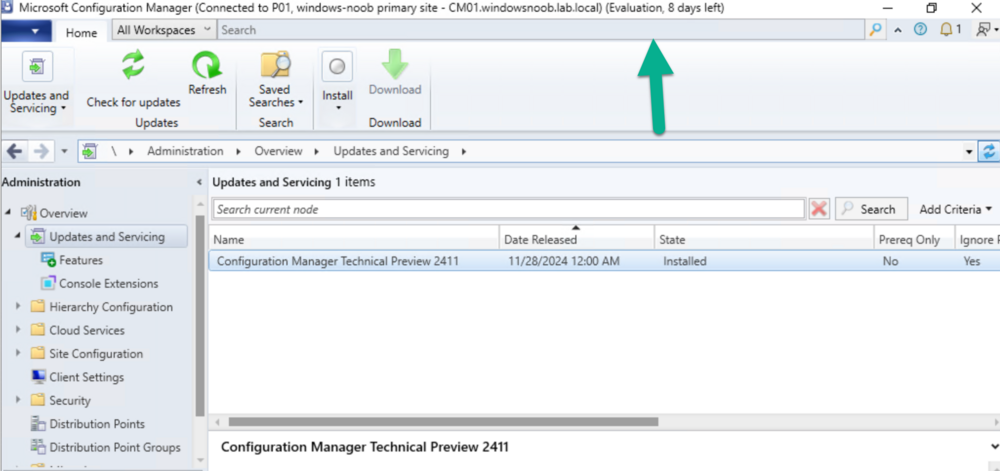

Introduction

Microsoft recently released a blog post outlining predictable changes to the cadence of update releases for SCCM, formerly known as System Center Configuration Manager. The pinned tweet below had some interesting replies which you can read yourself here. The blog post didn’t mention SCCM Technical Preview at all, but did TAG it as you can see here.

What exactly is SCCM Technical Preview ?

SCCM Technical Preview has been a test release of Configuration Manager that was released very regularly (at first) and developed for years, allowing SCCM admins to test the latest and greatest features coming to the product in advance, and allowing them to give valuable feedback to the product team, thereby shaping the mainstream product (Microsoft Configuration Manager) into what it is today. Technical Preview launched publicly in May 2015 and that journey started a decade-long coding frenzy, where a large team of motivated developers led by none other than the great David James (djammmer) developed great features at an outstanding pace, at least until the last few years when things slowed down to a noticeable crawl about a year after David left in October 2021. Without the Technical Preview releases you could argue that the move to the Cloud would have taken a lot longer.

Moving forward to today, the last documented release of SCCM Technical Preview was 2411 and you can still (at the time of writing) read documentation about that version here. I’ve personally tested and blogged about most of those releases over the last 10 years. Below you can see the timeline of upgrades I’ve done in my lab via the History feature within Configuration Manager. Note how it states Evaluation, 8 days left.

And below are my many blog posts on the subject: Installation – How can I install System Center Configuration Manager and Endpoint Protection Technical Preview 4 Spot the difference, maintenance windows versus service windows, Microsoft is listening and fixing !
System Center Configuration Manager Technical Preview 1601 is now available ! System Center Configuration Manager Technical Preview 1602 is now available ! System Center Configuration Manager Technical Preview 1603 is now available ! System Center Configuration Manager Technical Preview 1604 is now available ! System Center Configuration Manager Technical Preview 1605 is now available ! System Center Configuration Manager Technical Preview 1606 is now available ! System Center Configuration Manager Technical Preview 1607 is now available ! System Center Configuration Manager Technical Preview 1608 is now available ! System Center Configuration Manager Technical Preview 1609 is now available ! System Center Configuration Manager Technical Preview 1610 is now available ! System Center Configuration Manager Technical Preview 1611 is now available ! System Center Configuration Manager Technical Preview 1612 is now available ! System Center Configuration Manager Technical Preview 1701 is now available ! What is the ContentLibraryCleanup tool and how can I use it ? System Center Configuration Manager Technical Preview 1702 is now available ! Video – Upgrading to System Center Configuration Manager 1703 Technical Preview System Center Configuration Manager Technical Preview 1704 is now available ! System Center Configuration Manager Technical Preview 1705 is now available ! System Center Configuration Manager Technical Preview 1706 is now available ! System Center Configuration Manager Technical Preview 1707 is now available ! System Center Configuration Manager Technical Preview 1708 is now available ! System Center Configuration Manager Technical Preview 1709 is now available ! System Center Configuration Manager Technical Preview 1710 is now available ! System Center Configuration Manager Technical Preview 1711 is now available ! System Center Configuration Manager Technical Preview 1712 is now available ! 
System Center Configuration Manager Technical Preview 1801 is now available ! Update 1802 for Configuration Manager Technical Preview available now Update 1803 for Configuration Manager Technical Preview available now ! System Center Configuration Manager Technical Preview 1804 released System Center Configuration Manager Technical Preview 1805 released System Center Configuration Manager Technical Preview 1806 released System Center Configuration Manager Technical Preview 1807 released System Center Configuration Manager Technical Preview 1808 released System Center Configuration Manager Technical Preview 1809 is out A quick look at System Center Configuration Manager Technical Preview version 1810 A quick look at System Center Configuration Manager Technical Preview version 1810.2 System Center Configuration Manager Technical Preview version 1901 is now available System Center Configuration Manager 1902 Technical Preview is available System Center Configuration Manager 1902.2 Technical Preview is now available System Center Configuration Manager 1903 is now available and it includes my uservoice ConfigMgr log files help you when you least expect it, oh and SCCM 1904 TP is out ! How can I use the new Community hub in SCCM Technical Preview 1904 SCCM Technical Preview version 1905 is available and this is a HUGE release ! SCCM Technical Preview version 1906 is available System Center Configuration Manager Technical Preview version 1907 is available now ! System Center Configuration Manager Technical Preview version 1908 is available now ! Make your task sequences go faster with the Run as high performance power plan How to configure keyboard layout in WinPE in SCCM How to select an index when importing an Upgrade package into SCCM System Center Configuration Manager Technical Preview version 1909 is released ! System Center Configuration Manager TP1910 is out ! 
Microsoft Endpoint Manager Configuration Manager technical preview version 1911 is released Microsoft Endpoint Manager Configuration Manager Technical Preview version 1912 released New BitLocker Management features in Microsoft Endpoint Manager Configuration Manager Technical Preview 2002 Microsoft Endpoint Manager Configuration Manager Technical Preview 2002.2 is out Microsoft Endpoint Manager TP 2003 is out Microsoft Endpoint Manager Technical Preview 2004 is out Microsoft Endpoint Manager Configuration Manager technical preview version 2005 is out Microsoft Endpoint Manager TP2006 is out Slides and recording from “New cloud features in Configuration Manager Technical Preview” A look at task sequence media support for cloud-based content Technical Preview 2007 is out ! Technical Preview 2007 Timeline improvements Cool new features in Technical Preview 2008 OSD via boot media and CMG, available in TP2009 New features in Configuration Manager Technical Preview 2010 Manage BitLocker policies and escrow recovery keys over a cloud management gateway (CMG) Required application deployments visible in Microsoft Endpoint Manager admin center Fixing One or more Azure AD app secrets used by Cloud Services will expire soon Improvements to BitLocker support via cloud management gateway Technical preview 2104 get BitLocker recovery keys for a tenant-attached device Creating a VMSS CMG and setting VM size with Technical Preview 2105 How to change the CMG (VMSS) size after it is deployed Use Windows Updates notifications instead of ConfigMgr client notifications in tp2105.2 Configuration Manager Technical Preview 2106 is out, and it’s huge !! Technical Preview 2108 is out ! 
Technical Preview 2109 is out, update your console manually Technical Preview 2201 – Tenant attach is now GA Technical Preview 2202 – delete collection references Enabling dark-mode in Configuration Manager 2203 Escrow BitLocker recovery password to the site during a task sequence in Configuration Manager 2203 Technical Preview 2204 is out, brings ADR organization, Admin service + dark mode improvements New video: First looks at Distribution point content migration in Configuration Manager 2207 First looks at Distribution point content migration Technical Preview 2208 is out, adds RBAC for tenant attached devices Technical Preview 2209 is out, adds improvements to search in the console and dark mode theme Quick tip: Free up space on your ConfigMgr server Technical Preview 2302 is out Technical Preview 2305 is out, should you upgrade ? yes A quick look at Technical Preview 2307 Technical Preview 2401 is out Technical Preview 2411 is out, upgrade now before your lab times out Who cares ? My trusty SCCM Technical Preview lab support is coming to an end, and currently there’s no sign that Microsoft will do anything about it. It has 8 days left of support and after that, if Microsoft doesn’t release an update for Technical Preview, then it will become unsupported and unusable. I’ve pinged some of the official product teams on Twitter, asking if SCCM Technical Preview is dead but so far, there is no response. If they do update or reply I’ll post it here. This IMHO is a crying shame as many dedicated SCCM admins like me have updated these labs for years, and now those very same labs are left to die by the very company that encouraged us to not only use them, but to provide ongoing feedback. 
My Technical Preview lab, shown below, was used for things like setting up BitLocker management via Configuration Manager, Co-Management setup, Cloud Management Gateway (CMG), hybrid management of Windows 365 Cloud PCs, tenant attach, OSD via CMG and most recently the integration of Microsoft Intune’s Cloud PKI & SCEP when I technically reviewed this great book from my friends Paul & David. But now the clock is ticking and soon I won’t even be able to use the console.

Is this the end of Configuration Manager Technical Preview ? It certainly looks like it, and based on this quote in their opening paragraph, I think the answer is yes. Microsoft Intune is the future of device management, and all new innovations will occur there.

Update: No sooner had I posted this than I got a reply on Twitter from Arnie, and here it is, confirmation from Jason Sandys @ Microsoft that Technical Preview is no more. RIP

-

cool but i found the issue comes back, please let me know how it goes for you, by the way are your users 'standard' users or local administrators ?

-

Windows app is blank, how to troubleshoot ? part 2

miles0231 replied to anyweb's topic in Windows 365

thank you for this! this fixed the issue for us as well!!
