
Everything posted by Rocket Man


Are the ports configured on the SUP matching the ports specified on the WSUS IIS website?


Disabling Administrator..... creating new?

Rocket Man replied to P3nnyw1se's topic in Configuration Manager 2012

For your local admin account you could create an answer file, OR use this script and create a package out of it:

    @echo off
    cls
    echo Creating Local Account: ITadmin
    pushd %~dp0
    echo.
    net user ITadmin password1 /ADD /FULLNAME:"ITadmin" /COMMENT:"Built in Local Admin Account" /ACTIVE:YES /PASSWORDCHG:NO /EXPIRES:NEVER
    net localgroup "Administrators" ITadmin /add
    wmic useraccount where "name='ITadmin'" set PasswordExpires=False
    popd

OR you can use Run Command Line steps to achieve this as well. If you like, I will post them tomorrow if the above solutions do not suffice! OR, as Peter mentioned, use Group Policy to change the Administrator account name and set the password in the task sequence.

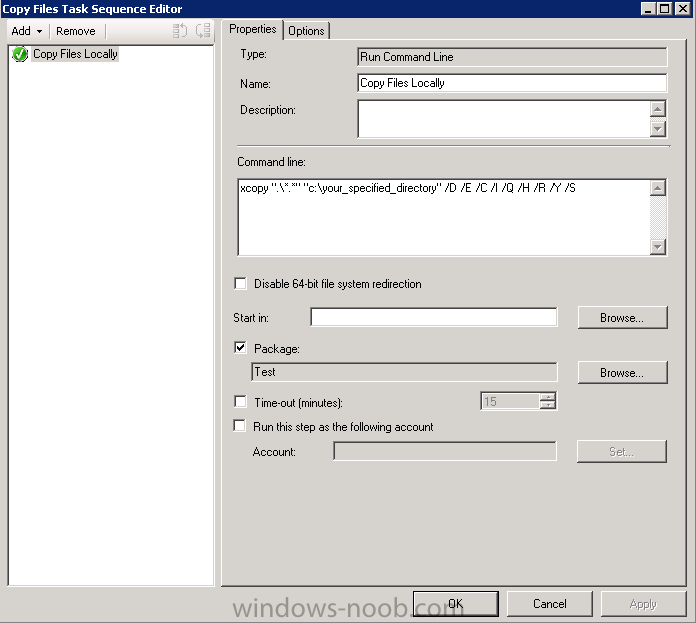

Why not use a Run Command Line step like this and simply reference the package that contains the 2 files:

    xcopy ".\*.*" "c:\your_specified_directory" /D /E /C /I /Q /H /R /Y /S

This will work without the use of scripting! Or, if you don't want to do it via a task sequence, a batch file (nameofbatch.bat) like this would do the trick:

    copy "%~dp0file1" "C:\Your_specified_directory" /y
    copy "%~dp0file2" "C:\Your_specified_directory" /y

Have the 2 files in a package along with the batch file, and the program command line will be nameofbatch.bat.
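If you want to sanity-check what the xcopy step should leave on disk, here is a minimal Python sketch of the same "copy everything under the package folder into a target folder" idea. It is only an approximation, assuming you care about /E, /I, /D and /Y (walk all subfolders including empty ones, create the target folder, skip files that are not newer, overwrite without prompting); /C, /Q, /H and /R are not modelled, and the function name copy_package_contents is hypothetical.

```python
import os
import shutil
from pathlib import Path


def copy_package_contents(source, target) -> list:
    """Hypothetical helper: recursively copy files from `source` into `target`.

    Approximates xcopy /E /I /D /Y only:
      /E -> every subfolder is recreated, empty ones included
      /I -> `target` is created as a folder if it does not exist
      /D -> a file is skipped if the copy in `target` is not older
      /Y -> existing files are overwritten without prompting
    Returns the relative paths of the files that were actually copied.
    """
    src_root = Path(source)
    dst_root = Path(target)
    copied = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = Path(dirpath).relative_to(src_root)
        # /E and /I: make sure the matching folder exists in the target
        (dst_root / rel_dir).mkdir(parents=True, exist_ok=True)
        for name in filenames:
            src = Path(dirpath) / name
            dst = dst_root / rel_dir / name
            # /D: only copy when the destination is missing or older
            if dst.exists() and dst.stat().st_mtime >= src.stat().st_mtime:
                continue
            shutil.copy2(src, dst)  # copy2 also carries over the modification time
            copied.append(str(rel_dir / name))
    return copied
```

Running it twice in a row copies everything the first time and nothing the second, which is the behaviour /D gives you on a re-run of the task sequence step.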


Create sysprepped VHD with SCCM 2012 R2

Rocket Man replied to goffries's topic in Configuration Manager 2012

Have you tried C:\Windows\system32\shutdown.exe -s -t 300 as the command line? Or, if shutdown.exe is located in the SysWOW64 folder on 64-bit machines, change the path to suit.

What exactly is the purpose of the batch file? Does it initiate a software install?


SCCM Management Point Critical Status

Rocket Man replied to i.luciano@tcnb.com's topic in Configuration Manager 2012

Good stuff, enjoy the SCCM experience!


SCCM Management Point Critical Status

Rocket Man replied to i.luciano@tcnb.com's topic in Configuration Manager 2012

Have you seen this? It points to the WMI errors in your MPMSI.log. 80041002 translates to "Not found" (source: Windows Management (WMI)).


SCCM 2012 build computer collection based on user group membership / primary user

Rocket Man replied to conco's question in Collections

Steps to take to achieve this:

1. In the Monitoring workspace, right-click the Queries node and create a new query.
2. Give the query a name.
3. Click Edit Query Statement.
4. When the Query Statement Properties window appears, select Show Query Language.
5. Paste in the query from my earlier reply and click OK.
6. Select Next until Finish.

You can then run this query by right-clicking it and selecting Run. If it is what you need, create a computer collection and import this query. Hope this helps!

SCCM 2012 build computer collection based on user group membership / primary user

Rocket Man replied to conco's question in Collections

In the Monitoring workspace there is a Queries node. Select it, right-click and create a new query. Give the query a name to suit the appropriate user group, for example. Then paste in the query from my earlier reply and make sure to edit the last part, i.e. Domain\\UserGroup, with your information. Once done, you can run this query to see if it is what you are looking for. Basically, it will give you the names of the systems that the users in the specified user group are associated with. Then, to create a computer collection from this info that you can deploy task sequences to, all you have to do is create a new collection and import the pre-made query!

SCCM 2012 build computer collection based on user group membership / primary user

Rocket Man replied to conco's question in Collections

Is this what you need? This query will pull all systems that the users in the specified user group are associated with. If it is what you need, first create a new query in the Monitoring > Queries node, then create a computer collection and import this query. The collection will then contain the systems that these users are using, and you can deploy your task sequence to it.

    Select SMS_R_SYSTEM.ResourceID,SMS_R_SYSTEM.ResourceType,SMS_R_SYSTEM.Name,SMS_R_SYSTEM.SMSUniqueIdentifier,SMS_R_SYSTEM.ResourceDomainORWorkgroup,SMS_R_SYSTEM.Client, SMS_R_User.UniqueUserName
    FROM SMS_R_System
    JOIN SMS_UserMachineRelationship ON SMS_R_System.Name=SMS_UserMachineRelationship.MachineResourceName
    JOIN SMS_R_User ON SMS_UserMachineRelationship.UniqueUserName=SMS_R_User.UniqueUserName
    Where SMS_R_User.UniqueUserName in (select UniqueUserName from SMS_R_User where UserGroupName = "Domain\\UserGroup")
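If you need one of these queries per user group, you can generate the text instead of hand-editing the last line each time. A minimal Python sketch follows, assuming only what the query above already shows: the backslash between domain and group is doubled inside the WQL string literal. The helper name user_group_query and the group CONTOSO\Sales are made up for illustration.

```python
def user_group_query(group: str) -> str:
    """Hypothetical helper: build the user-group-to-systems query for DOMAIN\\Group."""
    if '"' in group:
        # Keep it simple: refuse names that would break the quoted literal
        raise ValueError("group name must not contain double quotes")
    # One backslash in the real name is written as two inside the WQL string
    wql_group = group.replace("\\", "\\\\")
    return (
        "select SMS_R_SYSTEM.ResourceID, SMS_R_SYSTEM.ResourceType, SMS_R_SYSTEM.Name, "
        "SMS_R_SYSTEM.SMSUniqueIdentifier, SMS_R_SYSTEM.ResourceDomainORWorkgroup, "
        "SMS_R_SYSTEM.Client, SMS_R_User.UniqueUserName "
        "from SMS_R_System "
        "join SMS_UserMachineRelationship "
        "on SMS_R_System.Name = SMS_UserMachineRelationship.MachineResourceName "
        "join SMS_R_User "
        "on SMS_UserMachineRelationship.UniqueUserName = SMS_R_User.UniqueUserName "
        "where SMS_R_User.UniqueUserName in "
        "(select UniqueUserName from SMS_R_User "
        f'where UserGroupName = "{wql_group}")'
    )


print(user_group_query("CONTOSO\\Sales"))
```

The printed text can be pasted into the Show Query Language box exactly like the hand-written version.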

How about this:

    msiexec /i "%~dp0OutlookAddinSetup.msi" /qn /norestart /log "C:\Program Files\XXX\logging\FusionTest2.log"
    msiexec /x {205CFDD2-34CF-4141-93AA-55FCDB7BB012} /q
    regedit.exe /s "%~dp0settings.reg"

Have all of the files in one folder, including the batch file that executes them. To test, put the folder on a share, browse to it via its UNC path and run the batch file. If it works, create a package/application from it. The program command line will be the name of the batch file that executes the installs!


Probably the easiest way to do it is to create a collection, but if you can't do this, simply attach your uninstall package to a custom task sequence and add a WMI query condition so that it only runs on the 2 PCs in question within the collection of 50 PCs! What you're trying to achieve would probably be better done with applications as opposed to packages. Applications have detection methods, so if the required app is not detected on a system (for example, because a user has uninstalled it), it should install again.
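For the WMI query condition, the step only runs when the query returns at least one instance, so a query that matches just the two computer names does the job. Below is a small Python sketch that builds such a statement against Win32_ComputerSystem (root\cimv2). The computer names PC01/PC02 and the helper name computer_name_condition are hypothetical; substitute your own.

```python
def computer_name_condition(names: list) -> str:
    """Hypothetical helper: WQL that returns a row only on the listed machines."""
    if not names:
        raise ValueError("need at least one computer name")
    for name in names:
        # Computer names should never contain quotes; reject them rather than escape
        if "'" in name:
            raise ValueError(f"unexpected quote in computer name: {name!r}")
    clauses = " OR ".join(f"Name = '{name}'" for name in names)
    return f"SELECT * FROM Win32_ComputerSystem WHERE {clauses}"


print(computer_name_condition(["PC01", "PC02"]))
```

Paste the printed statement into the Query WMI condition on the uninstall step's Options tab; on the other 48 PCs it returns nothing and the step is skipped.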


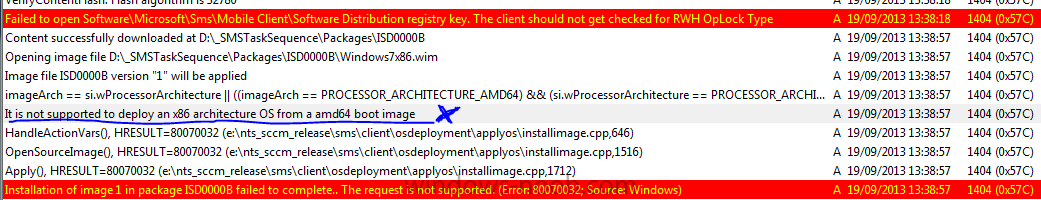

Have you attached an x86 boot.wim to the task sequence?


Create a detection rule using the user variable

Rocket Man replied to cravion's topic in Configuration Manager 2012

Hi VertigoRay, I had the task of getting Dropbox packaged today for use via the Application Catalog. Once again Windows-noob has been the resource used for this. The above script had to be edited slightly for the installation to be successful. The script that worked for me was:

    Set oFSO = CreateObject("Scripting.FileSystemObject")
    Set oShell = WScript.CreateObject("WScript.Shell")
    If oFSO.FolderExists(oShell.ExpandEnvironmentStrings("%AppData%\Dropbox\bin")) Then
        WScript.StdOut.Write "Dropbox is Installed"
        WScript.Quit(0)
    End If

If the part below is left in, the application fails to start once requested:

    Else
        WScript.StdErr.Write "Dropbox is NOT Installed"
        WScript.Quit(0)

The edited script still detects the application, and the installation is also successful thanks to a proper detection method being specified! So cheers for the detection script... otherwise I'd still be banging my head against the wall.
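Why the Else branch breaks things seems to line up with how, as I read Microsoft's documentation, ConfigMgr interprets a script detection method: it looks at the exit code and at whether anything was written to STDOUT or STDERR. The Python function below is only a model of that documented table (the name detection_state and the state strings are my own labels), useful for reasoning about what a detection script should print.

```python
def detection_state(exit_code: int, stdout: str, stderr: str) -> str:
    """Model of how a script detection result is interpreted (my reading of the docs)."""
    if exit_code != 0:
        return "Unknown"       # any non-zero exit counts as a script failure
    if stdout:
        return "Installed"     # output on STDOUT wins, even if STDERR also has data
    if stderr:
        return "Unknown"       # exit 0 with only STDERR output is treated as a failure
    return "NotInstalled"      # clean exit and no output at all
```

With the Else branch left in, a machine without Dropbox exits 0 with text on STDERR only, which lands in the "Unknown" (failed) row, so the deployment never gets as far as installing. Saying nothing and exiting 0 reports "not installed", which is what the application needs in order to install.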


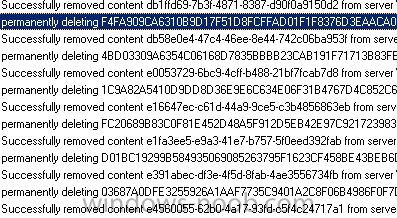

No... and yes. If you have scheduled update distribution and there are no files in the data source, then obviously when the scheduled update takes place it is going to update the target with no files! If the files had been removed from the content share, you could have checked distmgr.log for evidence that SCCM was removing them. But considering they are missing from the data source, I'm afraid I have no other thoughts on the matter other than to check the security of the data source share: make sure the Everyone group has no access to it, or at most read access, so that users are unable to make changes, in case this is indeed the cause of your problem. It is just a suggestion to rule out any unsolicited manual alteration of the source files, and it is good practice. Maybe you have these security settings in place already...


So to get this straight... you have a common share for all software on an E:\ drive, you have specified it as the data source of each package/app, and this is where the content is being removed from? If you have content validation or scheduled content distribution/updates on a regular basis, then no, this is not OK. Even if the content only needs to be distributed once, I feel that the data source is still very much needed and important for the package/app for continued successful deployments. Have you distributed the packages to a content share, which should be SMSPKGE$ or SMSPKGC$ depending on the drive targeted when the DP was configured? If so, is the content still inside the SMSPKGE$ or SMSPKGC$ share, in the SITECODEXXXXX folder?


That is a strange thing to be happening. Normally this would have to be triggered manually via SCCM (removing the package from the DP). Are you the only person who has admin access to the server? Is the content still in the sources folder but not in the DP share, or is it missing from both locations?


BSOD During Windows 7 OSD ndis.sys 0x000000D1

Rocket Man replied to senseless's topic in Configuration Manager 2007

It is better to capture your image from a VM rather than a physical machine, as an image captured from a VM can be deployed to any model, since no driver contamination has occurred. Then package your drivers for each model and attach them with a WMI query to suit. It wouldn't be a huge task for you to capture a new image on a VM and import/distribute it again, as you have already created the driver packages. It is obviously the image that you captured from the notebook that is the problem. From my own experience, sysprep does not strip out drivers: when using WDS I had some custom images that were fully polished with drivers etc., and once they were sysprepped, captured and deployed back out to the model they were captured from, all drivers were fine. So I feel that your image is the problem, as it contains drivers that are not compatible with your other notebooks.

Hi everyone, I am trying to provide some offsite assistance with a problem at a remote site trying to capture a custom-built image. I was sent the original log file. Sifting through it, there are a lot of errors relating to not enough disk space (0x80070070). The share that it was trying to capture to had 25 GB free, so I recommended trying a different share, even though 25 GB should have been enough for this image, as it does not have a vast amount of software that could cause this. Once a different share with ample free space was specified, the image captured successfully, but it is unusually large: 40+ GB! I cannot understand how this is so, and not being physically onsite adds to the problem. I have also seen the errors "SHEmptyRecycleBin failed, hr=8000ffff" and "Emptying Recycle bin failed, hr=8000ffff" in the original log; 0x8000ffff translates to "Catastrophic failure". Could this suggest that a large number of files were left in the recycle bin prior to capturing the image, and is that the reason why the final WIM image is so large? Or is this a normal log entry that can be disregarded, with something else causing this unusually large WIM image? Thanks
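For anyone decoding codes like these: an HRESULT packs a severity bit (bit 31), an 11-bit facility (bits 16-26) and a 16-bit code (bits 0-15). When the facility is 7 (FACILITY_WIN32), the low 16 bits are a plain Win32 error number. A minimal Python sketch follows; decode_hresult is a hypothetical helper, and the lookup tables only hold the few codes from these threads, with the standard Windows message texts.

```python
FACILITY_WIN32 = 7  # HRESULTs that simply wrap a Win32 error code

# Whole-HRESULT messages for codes that are not Win32 wrappers (sample only)
HRESULT_MESSAGES = {
    0x8000FFFF: "E_UNEXPECTED: Catastrophic failure",
    0x80041002: "WBEM_E_NOT_FOUND: Not found (WMI)",
}

# Win32 error number -> message, used when the facility is FACILITY_WIN32 (sample only)
WIN32_MESSAGES = {
    112: "ERROR_DISK_FULL: There is not enough space on the disk.",
}


def decode_hresult(value) -> dict:
    """Split an HRESULT (int, or hex string such as '8000ffff') into its fields."""
    hr = int(value, 16) if isinstance(value, str) else value
    hr &= 0xFFFFFFFF               # normalise negative (signed 32-bit) values
    severity = hr >> 31            # 1 = failure, 0 = success
    facility = (hr >> 16) & 0x7FF  # bits 16-26
    code = hr & 0xFFFF             # bits 0-15
    message = HRESULT_MESSAGES.get(hr)
    if message is None and facility == FACILITY_WIN32:
        message = WIN32_MESSAGES.get(code)
    return {
        "hresult": f"0x{hr:08X}",
        "failed": bool(severity),
        "facility": facility,
        "code": code,
        "message": message or "unknown (not in the sample tables)",
    }


print(decode_hresult("0x80070070"))
```

So 0x80070070 is just Win32 error 112 (disk full) wrapped in an HRESULT, which fits the capture share running out of space, while 8000ffff is the generic E_UNEXPECTED.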


SCCM 2012 Deploy Windows 7 SP1 Remote Sites

Rocket Man replied to oloughran's topic in Configuration Manager 2012

Is there any server infrastructure at the remote sites? I would consider implementing DPs, though again this depends on finances/resources for server infrastructure. You can throttle DPs for bandwidth usage etc. Some of my sites are connected via 7 Mbps links. These sites may only have 50-60 PCs, but imaging works fine, since there are local DPs and any content such as ADR updates is distributed outside business hours, so it has no effect on the link during business time. The only other traffic that crosses these links is AD replication, some Outlook clients connecting to Exchange, and the SCCM client communication back to a central MP, which again could be throttled down to a much less frequent interval than the default 60 minutes in the client settings. A DP can also be a Windows 7 machine... but with this method I think you have no PXE functionality. You could, however, deploy the OS via the staged method (onto the live OS), and at least then the content associated with the task sequence would be local, with no need to run around with discs to the individual machines.

SCCM 2012 Deploy Windows 7 SP1 Remote Sites

Rocket Man replied to oloughran's topic in Configuration Manager 2012

By remote sites, do you mean sites with distribution points? If so, and you are having trouble distributing the image (not deploying it), then you may want to look at prestaged content media as an option for getting your packages across the slow WAN links.
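A quick back-of-the-envelope check shows why prestaging is worth it on slow links. The Python sketch below estimates raw transfer time from image size and link speed. It assumes decimal gigabytes, ignores protocol overhead and any compression, and uses a hypothetical 4 GB WIM with the 7 Mbps link speed mentioned in my other reply; transfer_hours is a made-up helper name.

```python
def transfer_hours(size_gb: float, link_mbps: float, usable_share: float = 1.0) -> float:
    """Rough hours to push size_gb (decimal GB) over a link_mbps link.

    usable_share is the fraction of the link the transfer may use,
    e.g. 0.5 if the DP is throttled to half the bandwidth.
    """
    if link_mbps <= 0 or not 0 < usable_share <= 1:
        raise ValueError("link speed must be positive and usable_share in (0, 1]")
    bits = size_gb * 8 * 1000**3                    # GB -> bits (decimal units)
    bits_per_second = link_mbps * 1_000_000 * usable_share
    return bits / bits_per_second / 3600            # seconds -> hours


print(round(transfer_hours(4, 7), 2))       # whole link
print(round(transfer_hours(4, 7, 0.5), 2))  # throttled to half
```

That works out to roughly 1.3 hours with the whole link, or about 2.5 hours when throttled to half, per image and per DP, before anything else on the link competes with it. Prestaged media avoids that traffic entirely.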

BSOD During Windows 7 OSD ndis.sys 0x000000D1

Rocket Man replied to senseless's topic in Configuration Manager 2007

And have any drivers been added to SCCM since then? As you are using a pool of drivers, SCCM may install any driver that is suitable, and if drivers have been added recently, then maybe it's a newer version of a driver that is causing the problems and not an outdated one! When you move to CM2012, you're better off creating driver packages for your notebooks/systems, as there is less that can go wrong: only the drivers in the package will install, and not any other one(s) that may be compatible/suitable.