Everything posted by Ocelaris

-

Remote SUP for Internet Clients on SCCM 2012

Ocelaris replied to lord_hydrax's question in Software Update Point

You know, it's been so long since I worked on this (3 jobs ago). My recollection is that we specified an internet-facing name and published it on our external DNS. It worked quite well, but that company has since upgraded SCCM, so I don't even have that for reference. Sorry!

We have been on CM 2012 SP1 CU3 for about a year, but we've only recently moved server patching to CM. I've noticed that Configuration Manager does not have updates prior to 6/2013, although the WSUS console does have them. For example, the WSUS console shows MS11-041, which our audit says we need, but wsyncmgr.log says "superseded", and tracking down the supersedence leads me nowhere. I imagine there MUST be at least one update from 2011 or 2012 that is not superseded, but I do not see any in our Configuration Manager console prior to 6/2013.

Is there a way to get ALL prior updates into Configuration Manager? We have an update, MS11-041, which has probably been superseded (KB2525694), but since it no longer shows in the CM console I can't trace it. Is there any way to trace from an old update to a new one? If I just change my "mark updates expired after" setting to 400 months, will older updates show up in the CM console?

-

Unexpected Server Restart outside Maintenance Window

Ocelaris replied to dverbern's topic in Configuration Manager 2012

We're looking at the same thing. The article that Peter33 pointed out recommends setting "Configure automatic updates" to disabled, which should not impact your CM updates (untested). Secondly, look at your maintenance windows. If you have no maintenance window, the update will be applied at the deadline, and that often happens in the middle of the day. In other words, you must have a regularly occurring maintenance window or your outstanding deployments will apply at the wrong time. Also make sure your maintenance window isn't set to "apply only to task sequences". Hope that helps.

Auto Deployment Rule download failed 0X87D20417

Ocelaris replied to dverbern's topic in Configuration Manager 2012

Another way to test your proxy settings as the computer account is to open a command prompt (as admin) and run "psexec -i -s -d cmd", which opens a new command prompt running as the computer account. From there, launch Internet Explorer from wherever it resides in c:\program files\etc... and try to open the Microsoft URL it's having trouble with, i.e. http://wsus.ds.download.microsoft.com----.exe, and see if you can open the file. That will rule out any proxy issues (I'm assuming that this WSUS download process runs as the computer account).

Auto Deployment Rule download failed 0X87D20417

Ocelaris replied to dverbern's topic in Configuration Manager 2012

Found this little blurb: http://social.technet.microsoft.com/Forums/en-US/5a9596d3-6f0b-4907-a788-efc06601a88a/there-was-an-error-downloading-the-software-update-12002?forum=configmanagersecurity

From the post: 12002 = "timeout", and it seems to revolve around the proxy. Can you either bypass the proxy temporarily or open it up to your computer account temporarily? Maybe check the IE proxy settings on the SCCM server?

Auto Deployment Rule download failed 0X87D20417

Ocelaris replied to dverbern's topic in Configuration Manager 2012

I had a few syncs/downloads fail because a license (EULA) file was not found. Basically the synchronizations were working, but then the clients were failing because Microsoft never had the license.txt file. The solution was to grab it from another WSUS server which previously had it, or to do a full wsusutil /reset, which retries downloading everything. I bet if you look on the clients that are failing, they'll tell you exactly which file is missing, i.e. "trying to reach http://server/1/a/393930403-3039303-39303039/EULA.txt but cannot find it" etc. Then go to that directory on your WSUS server and see whether the file is indeed there (it's not). The WSUSUtil.exe tool under c:\program files\update services\ is what I believe Microsoft and I used to reset the record of what had or hadn't been downloaded to our WSUS server.

You should test that theory by adding the "F8" command prompt to your boot image. Then hit F8, run ipconfig, and see if you can ping 4.2.2.2 etc. Go into your boot image's options and there is a check box for adding "F8 or command prompt testing" etc... very useful.
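A minimal Python sketch of that "is the file really on the server" check: given the relative paths the failing clients complain about, it reports which ones are absent from the WSUS content folder. The content root and the sample file name are placeholders for illustration, not values from a real server.

```python
from pathlib import Path, PurePosixPath


def find_missing_content(content_root, requested_paths):
    """Return the requested relative paths that do not exist under content_root.

    requested_paths are URL-style relative paths (forward slashes), e.g. the
    part of the client's failing URL that follows the server name.
    """
    root = Path(content_root)
    missing = []
    for rel in requested_paths:
        # Split on "/" so the same input works on Windows and elsewhere.
        parts = PurePosixPath(rel.lstrip("/")).parts
        if not root.joinpath(*parts).is_file():
            missing.append(rel)
    return missing


if __name__ == "__main__":
    # Hypothetical content root and file name, for illustration only.
    for rel in find_missing_content(r"D:\WSUS\WsusContent", ["AB/example-eula.txt"]):
        print("missing on server:", rel)
```

Anything the function returns is a candidate for copying over from another WSUS server or for a wsusutil /reset, as described above.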

-

It looks like your device is not found in the SCCM database, i.e. it's booting to an advertisement for unknown devices:

70:5A:B6:B0:69:08, DBDE1CAF-ECFA-11DE-81D0-B9F76E39E8E2: device is not in the database.

70:5A:B6:B0:69:08, DBDE1CAF-ECFA-11DE-81D0-B9F76E39E8E2: found optional advertisement TOL20086

Looking for bootImage TOL00002

So it is looking for your boot image TOL00002. Is that the boot image you intended for your task sequence? TOL00002 sounds like the default x64 or x86 boot image. I'm not sure if you have a custom one, but mine is like ###00033 or similar, i.e. I created it much later so it's incremented higher. I'd just make sure that the boot image is correct for your task sequence, drivers etc. I never boot to unknown devices because I worry about people reimaging accidentally, but maybe that's the way you do it...
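If you have a lot of smspxe.log to wade through, a small Python sketch like the one below can pull out the same three facts (unknown device, advertisement ID, boot image ID). It is written only against the lines quoted in this post; the patterns are my assumption about what to look for, not a full parser for the log format.

```python
import re

# MAC address, SMBIOS GUID, then the message, as in the lines quoted in the post.
CLIENT_LINE = re.compile(
    r"(?P<mac>(?:[0-9A-F]{2}:){5}[0-9A-F]{2}),\s*"
    r"(?P<guid>[0-9A-F]{8}(?:-[0-9A-F]{4}){3}-[0-9A-F]{12}):\s*"
    r"(?P<message>.+)",
    re.IGNORECASE,
)
BOOT_IMAGE = re.compile(r"Looking for bootImage\s+(?P<package>\w+)", re.IGNORECASE)
ADVERTISEMENT = re.compile(r"advertisement\s+(?P<advert>\w+)", re.IGNORECASE)

# The three lines quoted in the post.
SAMPLE = [
    "70:5A:B6:B0:69:08, DBDE1CAF-ECFA-11DE-81D0-B9F76E39E8E2: device is not in the database.",
    "70:5A:B6:B0:69:08, DBDE1CAF-ECFA-11DE-81D0-B9F76E39E8E2: found optional advertisement TOL20086",
    "Looking for bootImage TOL00002",
]


def summarize_pxe_lines(lines):
    """Collect what the log lines say about one PXE boot attempt."""
    summary = {"mac": None, "guid": None, "known_device": None,
               "advertisement": None, "boot_image": None}
    for line in lines:
        client = CLIENT_LINE.search(line)
        if client:
            summary["mac"] = client["mac"]
            summary["guid"] = client["guid"]
            message = client["message"]
            if "not in the database" in message:
                summary["known_device"] = False
            advert = ADVERTISEMENT.search(message)
            if advert:
                summary["advertisement"] = advert["advert"]
        boot = BOOT_IMAGE.search(line)
        if boot:
            summary["boot_image"] = boot["package"]
    return summary


if __name__ == "__main__":
    print(summarize_pxe_lines(SAMPLE))
```

The boot image ID it reports is the one to compare against the boot image assigned to your task sequence.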

-

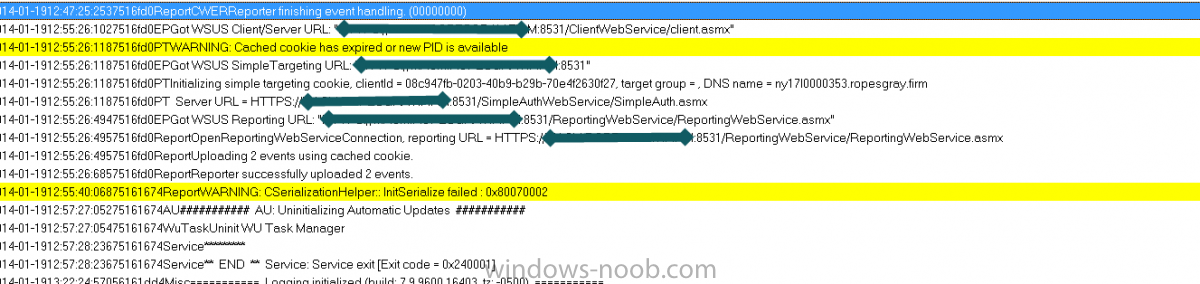

Look at the client log, windowsupdate.log in c:\windows\: does it say that it's reaching out to YOUR WSUS server or to the internet? It should say it's reaching out to your server, like in this pic. If it's not reporting "SERVER URL = YOUR SERVER:8530/8531", then the client hasn't been made aware you have a new WSUS server. I think it might have been a mistake to reuse the same computer name, as it's now unclear whether the client is trying to hit the new or the old machine. Did you remove the computer account from the domain and create a new account, or just reuse it?

Follow the path of the client from windowsupdate.log to your IIS logs and make sure the client is reaching out correctly. You say it's having trouble with the ADR subscriptions; if your sync is working properly, then you should look at whether the clients have an issue reaching your server. You can test connectivity to http://yoursite:8530/simplewebservice/simpleauth.asmx or one of the other links in windowsupdate.log. You may have some prerequisites missing in your IIS installation, so I'd go back and check the authentication options, i.e. Windows authentication etc. But you should see errors in the IIS logs of the WSUS server if your clients can't access the source for the files, i.e. if you're having an authentication issue etc...

-

Auto Deployment Rule download failed 0X87D20417

Ocelaris replied to dverbern's topic in Configuration Manager 2012

What does your wsyncmgr.log say? Are your synchronizations failing completely? There are a few more WSUS logs to check, like WSUSCtrl.log (connection to the WSUS server) and WCM.log. Open up the WSUS console (not the SCCM console) and see whether your synchronizations are failing or just your ADRs. Look at the site it's trying to connect to and test by running IE as the service account using PsExec. If it's the computer account, run psexec -i -s -d cmd, which gives you a command prompt as the computer account from which you can launch IE and test the proxy connection.

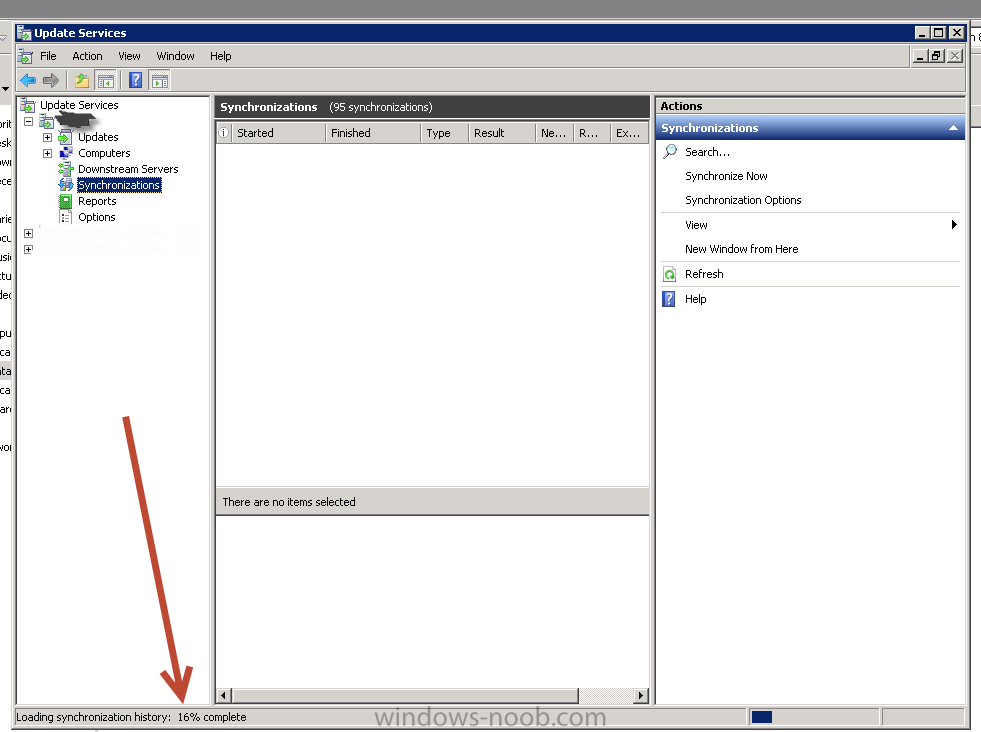

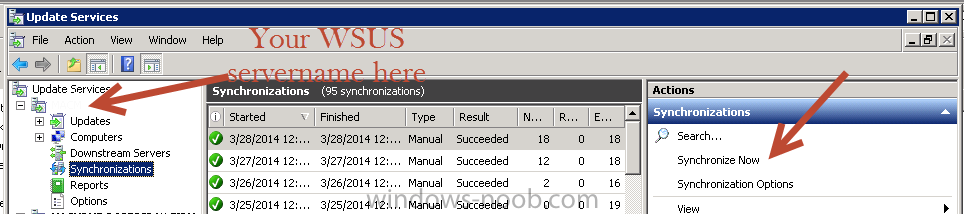

So you have WSUS installed on a separate box? Try opening the WSUS console (not SCCM) on your SCCM server and see if you can connect; look at the syncs if you can. The first order of business is to see whether your WSUS server is getting updates from the internet.

Where is your SQL? The computer account of your SCCM server should probably be admin on the WSUS box and the SQL box, as well as SA or at least owner of the database. If this is a local database, then to check it you have to install SQL Server Management Studio locally and turn on the ability to connect to the box remotely so you can look at the database permissions.

I'm not sure if your clients are connecting to your WSUS server, but typically you look at the IIS logs on that server to tell whether they are able to connect, i.e. no 404 errors etc. That's c:\inetpub\logs\w3svc something something. Also make sure your SCCM server knows to use 8530, assuming your WSUS is set up for 8530 (which it thinks it is).

First step: open the WSUS console on the SCCM server and make sure you can connect. Second step: check/open up permissions on WSUS/SQL for your SCCM server's computer account (and your admin account for testing). If you're not getting good syncs, try "synchronize now". Also try the WSUSUTIL /reset type commands found on your WSUS server under c:\program files\update services\yada yada

Hope that helps; I have gone round and round with WSUS a few times.
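For the IIS log check, here is a rough Python sketch that counts 4xx/5xx responses per URL in a W3C-format IIS log. It reads the column layout from the "#Fields:" header that IIS writes at the top of each log, so it shouldn't care which fields you have enabled. The sample lines are made up to show the shape of the input; they are not from a real server.

```python
from collections import Counter


def count_error_statuses(log_lines):
    """Count HTTP 4xx/5xx responses per (status, URI) in a W3C-format IIS log."""
    fields = []
    errors = Counter()
    for line in log_lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            # Column names for the rows that follow.
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or not fields:
            continue
        values = line.split()
        if len(values) != len(fields):
            continue  # skip malformed or truncated rows
        row = dict(zip(fields, values))
        status = row.get("sc-status", "")
        if status[:1] in ("4", "5"):
            errors[(status, row.get("cs-uri-stem", "?"))] += 1
    return errors


# Made-up sample rows, for illustration only.
SAMPLE = [
    "#Fields: date time cs-method cs-uri-stem c-ip sc-status",
    "2014-01-01 10:00:00 GET /missing/file.cab 10.0.0.5 404",
    "2014-01-01 10:00:01 GET /present/file.cab 10.0.0.5 200",
]

if __name__ == "__main__":
    for (status, uri), count in count_error_statuses(SAMPLE).most_common():
        print(status, uri, count)
```

Lots of 404s point at missing content on the WSUS server, while 401/403 would fit the permissions theory.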

-

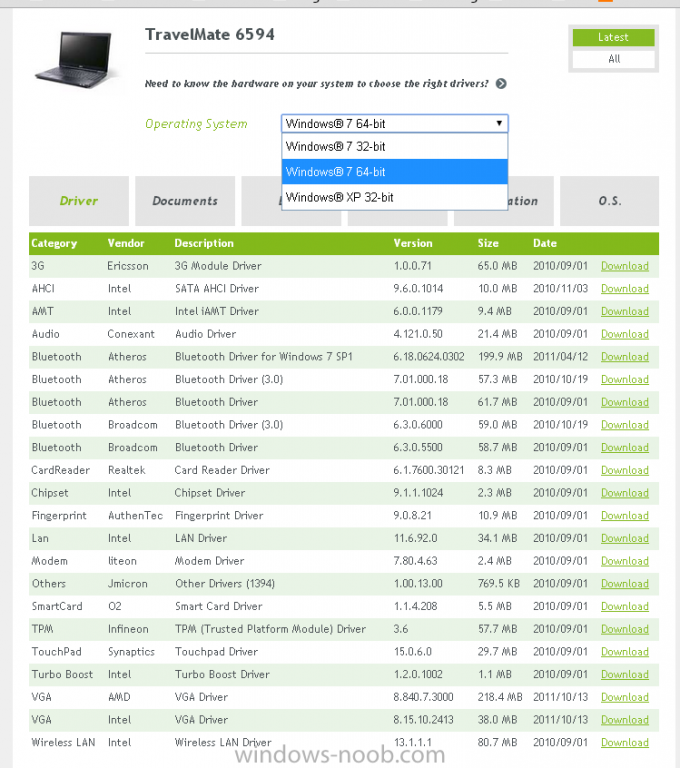

I forget which ADK I'm using, but the only installation is c:\program files (x86)\Windows Kits\8.0, so I am 95% sure I have the Win 8.0 ADK, and I have no problems deploying Win 8.1 x64. I'm surprised you aren't injecting any drivers; that's always been my issue.

I would make sure you have the "F8" option turned on and do a number of checks during imaging. First make sure you have network connectivity, i.e. ping from the F8 command prompt. Also run diskpart and confirm that the c:\ drive is being written properly. At a minimum you should make a driver package IMHO and apply that before installing your CM client. It's typically the chipset or some inane driver that crashes the TS when I see a "memory can't be written" kind of thing. Are you using a 64-bit boot disk? Are you rebooting after applying the WIM "to operating system", i.e. not to the boot disk? Can you post your full SMSTS.log (preferably as an attachment, not inline)? I would personally strip out your domain before posting.

Also, your model is fairly old, so it might be a straight-up compatibility matter, i.e. see the screenshot: they don't offer Win 8 or 8.1 drivers. I had a lot of problems with drivers moving to 8.1 in a few cases. You can probably get the latest chipset and AHCI drivers from the manufacturers directly though. 90% of the Win7 drivers may work, but you should definitely go through and make sure that when you import them they have "win 8.1" checked off in the "applicability" section.

-

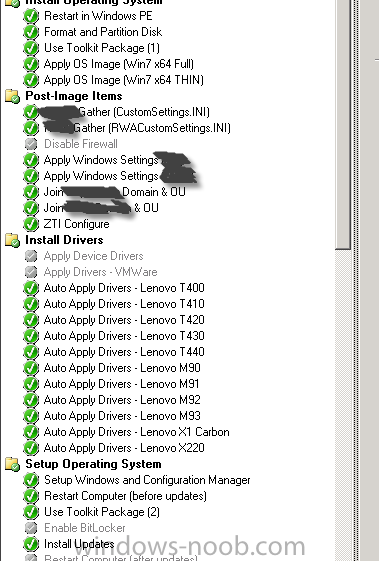

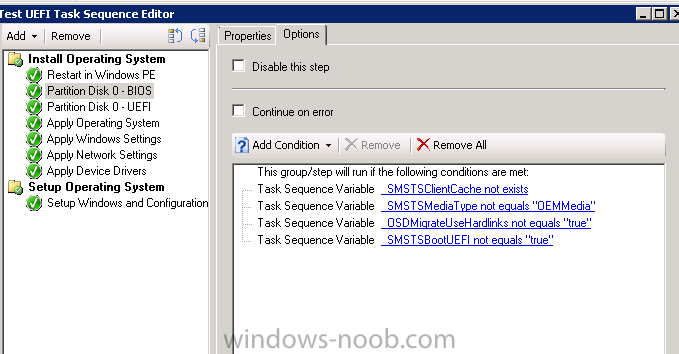

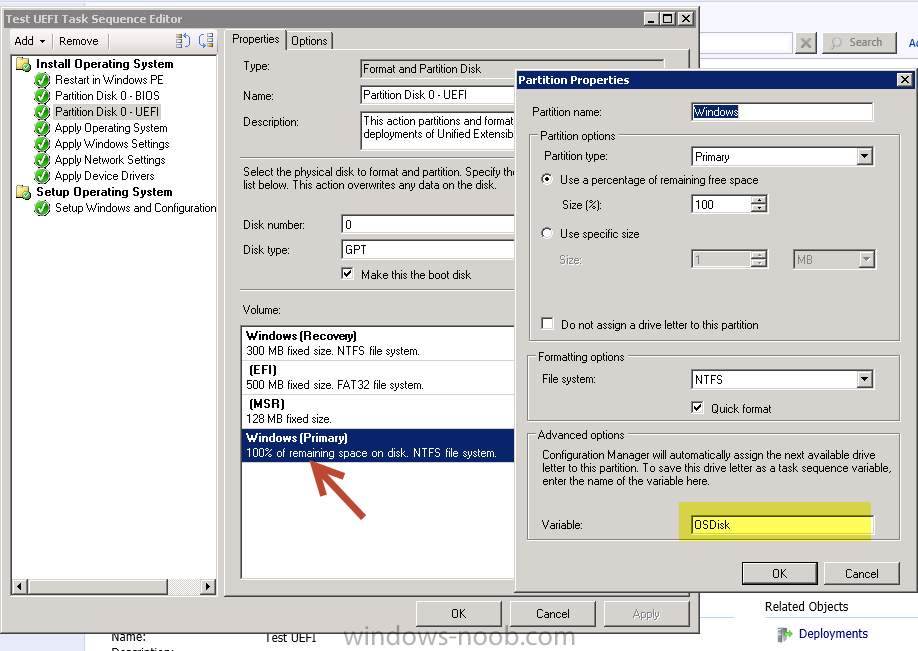

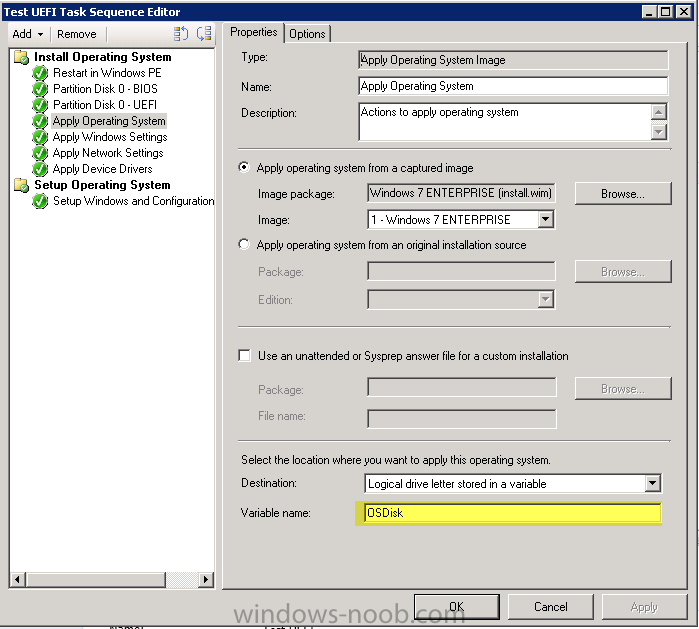

I've been working to get UEFI working in our environment for the past few weeks and thought I'd share some information. This is on Lenovo hardware, but it's probably applicable across most brands. With some of the newer Lenovo laptops/desktops coming with Windows 8 out of the box, a lot of them come with UEFI turned on and have some really great boot times because of it. So I packaged up these new models for our standard Windows 7 64-bit image and then went to work on getting them to work with UEFI. If you haven't checked out Niall's how-to already, it's a good place to start. http://www.windows-noob.com/forums/index.php?/topic/6250-how-can-i-deploy-windows-8-in-uefi-mode-using-configuration-manager-2012/

UEFI requires a GPT partition, but not all machines are fully compatible with UEFI, so you have to determine whether your machine can do UEFI. When you create a generic task sequence (like in Niall's walkthrough), it gives you some nice conditional steps which check the built-in OSD task sequence variable _SMSTSBootUEFI, which tells you whether you've booted using UEFI. I found it to be only partially reliable, so I ended up doing WMI queries based on model numbers, i.e. if the model is new, partition in GPT format; if the model is old, partition in MBR format.

Another key is that when you lay down your WIM, you should set it to install to a variable, "OSDISK", and you need to make sure to tag your main Windows partition as "OSDISK" in the partitioning step as well. This should be done in both the BIOS and UEFI partitioning steps; then you only have to point one WIM at either type of partitioning. The same WIM works on UEFI or BIOS, it doesn't matter.

Another problem is how to get your machines which support UEFI into UEFI mode. Lenovo provides VBScripts which let you turn various BIOS settings on/off. Some discovery on your part is required, as they are WMI queries and vary from model to model. Lenovo BIOS scripts: http://support.lenovo.com/en_US/detail.page?LegacyDocID=MIGR-68488

The last important piece is that not all hardware components are fully UEFI compliant; specifically, Lenovo video drivers are not fully UEFI compliant in Windows 7. They work 100% in Windows 8, but in Windows 7 you MUST use CSM, the Compatibility Support Module. Basically you're not going to get the ultra-fast boot times because the video card BIOS isn't fully supported in Windows 7. You have to go to Windows 8 to unlock the ultra-fast reboot. Lenovo support article explaining that Windows 7 is not fully compatible with the Intel video: https://forums.lenovo.com/t5/T400-T500-and-newer-T-series/Unable-to-Install-Windows-7-without-CSM-support-enabled-in-BIOS/ta-p/1038089

Summary: UEFI is great, but a lot of hardware isn't fully compliant under Windows 7, so the benefit just isn't there yet.
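The model-based GPT/MBR decision described in this post boils down to a lookup, sketched here in Python. In a real task sequence this would be a WMI query condition on the partitioning steps rather than a script; the model prefixes below are placeholders, not real Lenovo model numbers.

```python
# Placeholder machine-type prefixes; replace with models you have tested in UEFI mode.
UEFI_VERIFIED_PREFIXES = ("AAAA", "BBBB")


def partition_scheme_for(model):
    """Return "GPT" for models verified to work in UEFI mode, else "MBR"."""
    normalized = model.strip().upper()
    if normalized.startswith(UEFI_VERIFIED_PREFIXES):
        return "GPT"
    # Unknown or older models fall back to the legacy BIOS layout.
    return "MBR"


if __name__ == "__main__":
    for model in ("AAAA1234", "ZZZZ0001"):
        print(model, "->", partition_scheme_for(model))
```

Defaulting unknown models to MBR is a deliberate choice in this sketch: a machine only gets the GPT layout once someone has confirmed it works.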

-

Why not use a Restricted Groups group policy instead of scripting it? Users can easily add or remove members of groups, whereas a Restricted Groups policy keeps being enforced over time.

-

You're missing some drivers. The message isn't very clear, but even ones you might not think are relevant, like AMT, need to be present. I've gotten strange errors like this before, and it has always been missing drivers. Go back and make sure to separate your 32-bit from your 64-bit drivers and assign them to their own categories etc. Double down on your drivers IMHO.

-

I can switch back and forth between UEFI and legacy BIOS no problem with my standard 64-bit boot image, which is running CM 2012 SP1 CU2 (or 3, not sure offhand). I don't think you're having a UEFI issue; you're having a PXE issue. Do you have a 64-bit boot disk? UEFI only works with 64-bit. Post 50-some lines of smspxe.log from when you're trying to boot. Basically you shouldn't have any trouble PXE booting; the problems come when you start laying down drivers and GPT disks etc...

-

SMSTS.log indicate Access is Denied for VBS script

Ocelaris replied to Config_Mgr_noob's topic in Configuration Manager 2012

IMHO you're using bad form by running a command from a remote directory. The account that would need read permissions on that directory is not your Network Access Account but EVERY COMPUTER ACCOUNT accessing the share. Basically, don't. Make a package and run file.vbs instead of \\really long directory structure\file.vbs. Have SCCM manage this package instead of relying on opening up shares. Make a package called "scripts" and make that part of every deployment etc.

The problem is that your VBScript is calling out to a remote location while running under the computer context, which doesn't have access to that directory. Fix your VBScript so it does not call out to remote directories. The other possibility is that you're deliberately running a "program" that does \\server\script.vbs, which is also a no-no. You can, but it's bad practice because you run into situations where people don't grasp how everything comes together and things break. My guess is that the script is executing when the computer is not on the domain, or otherwise doesn't have access. Treat all your apps as "local": they execute out of the c:\windows\ccmcache\###### folder, so you should include everything you need in your package.

If you must have your VBScript call out to remote locations, you would have to open up share permissions to all computer accounts, i.e. "authenticated users" in a domain, not just "domain users", because it's running under the system context. Applications run as the system account of the local machine, unless they run with the user's credentials as an optional task sequence. To get a valid picture of whether your script will run in SCCM, run it in the "computer" context: open a PsExec window as the computer account by running "psexec -i -s -d cmd", then run "cscript script.vbs" and you will see your errors.

These are just conjectures because your problem isn't described fully/correctly, but 3 points to remember:

1) All scripts need to use "relative" paths, i.e. don't make calls to random SMB shares; it rarely works.

2) They typically run in the computer account's system context; that is the account you would have to grant share/NTFS permissions to if you really want to reach out to SMB shares.

3) NEVER check the box in the package under "Data Access", "Copy the content in this package to a package share on distribution points". Also never check "run from remote distribution point". You're not doing these, but I wanted to point out that if you do check that box, it copies the package to the SMSPKGD$ share and makes it an SMB share so you could "run from remote distribution point", but then you have 2 copies (wasting space) and it often fails to run from a DP. This one is my personal preference.

There are ways to run scripts "as another account", but then you have to make sure the account has admin rights on that box and network share permissions. IMHO it's bad form to try to run it that way. It's more reliable to run the script as the system account on that machine, with all the files you need to reference included in your package; reaching out to shares is not reliable.

Typically you're going to create automatic deployment rules that target a collection, choose how frequently they run and which updates get pushed to which clients, and then let them do all the work. Make sure to choose "Add to existing software update group", otherwise the rule will create many, many update groups. You are going to make multiple collections based on what they need. For newly installed clients you would put a "windows update" step in the build task sequence; otherwise they'll get the updates eventually. You have to play around with how many updates get put into a task sequence, as sometimes it needs lots of reboots between updates.

For "test" pushes, you typically have an IT test machine collection or similar, and you would make an automatic deployment rule targeted at that collection that runs earlier than for other machines, so the entire company gets updates a week or two after the rule runs for the IT test group. Your normal "all patches" can go out to whatever "main" collection you want; just make an automatic deployment rule that targets it.
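To make the "test group first, everyone else a week or two later" timing concrete, here is a small Python sketch of the date arithmetic. The collection names and the 14-day default are assumptions for illustration; in CM you would set the equivalent offsets in each rule's deployment schedule.

```python
from datetime import date, timedelta


def rollout_dates(rule_run_date, pilot_delay_days=0, production_delay_days=14):
    """Return the date each collection's deployment becomes available."""
    if production_delay_days <= pilot_delay_days:
        raise ValueError("the main collection should be patched after the test group")
    return {
        "IT test collection": rule_run_date + timedelta(days=pilot_delay_days),
        "main collection": rule_run_date + timedelta(days=production_delay_days),
    }


if __name__ == "__main__":
    for collection, when in rollout_dates(date(2014, 1, 14)).items():
        print(collection, when.isoformat())
```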

-

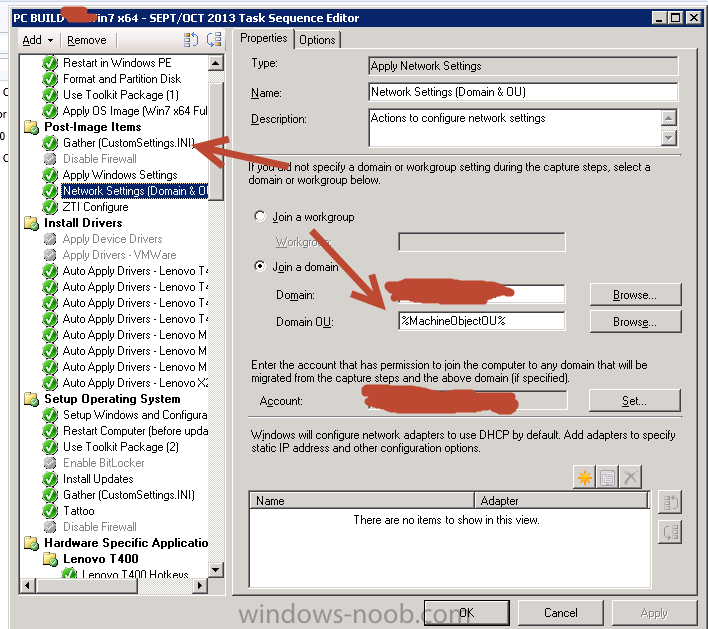

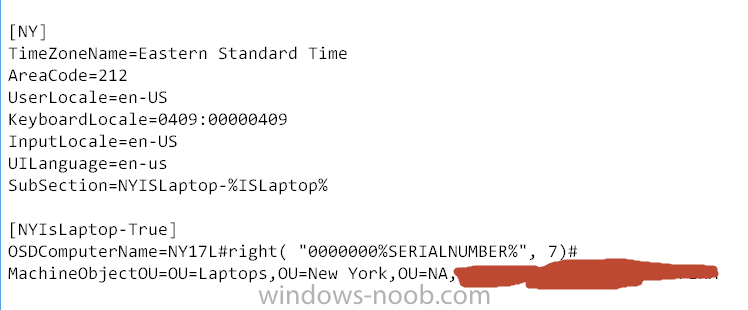

Auto-assign computername in OSD Deployment with VB Script

Ocelaris replied to Bever87's topic in Configuration Manager 2012

Why go the custom VBScript route? There is a good method built into MDT for naming machines and adding them to an OU structure. Create a customsettings.ini package from MDT and use this naming convention. I imagine there is a walkthrough; we have had this in our environment for a long time and it works quite well.

So my main site is in Massachusetts (USA), and we opened an office in Seoul, Korea. I'm getting all sorts of distribution point errors, so I figured it was a problem with the installation and uninstalled/reinstalled, and now I'm in worse shape than when I started. We have 10 sites and all are working generally pretty well except this one. Other remote sites in Asia (Tokyo, Hong Kong, Shanghai) seem to be working fine.

Latency from Tokyo to Massachusetts is 185 ms.

Latency from Seoul to Massachusetts is 218 ms.

WMI tests using WBEMTEST fail to connect from Massachusetts to Seoul about half the time. They work from Tokyo to Seoul every time. Are there any settings to accommodate higher-latency connections? Should I be putting a management point in Asia? The primary site server is in MA, so I'm not sure a second MP in Asia would even be responsible for pushing the DP installation and packages. I can set up a pull site in Asia, but at this point I can't even get the DP reinstalled. Thoughts?

-

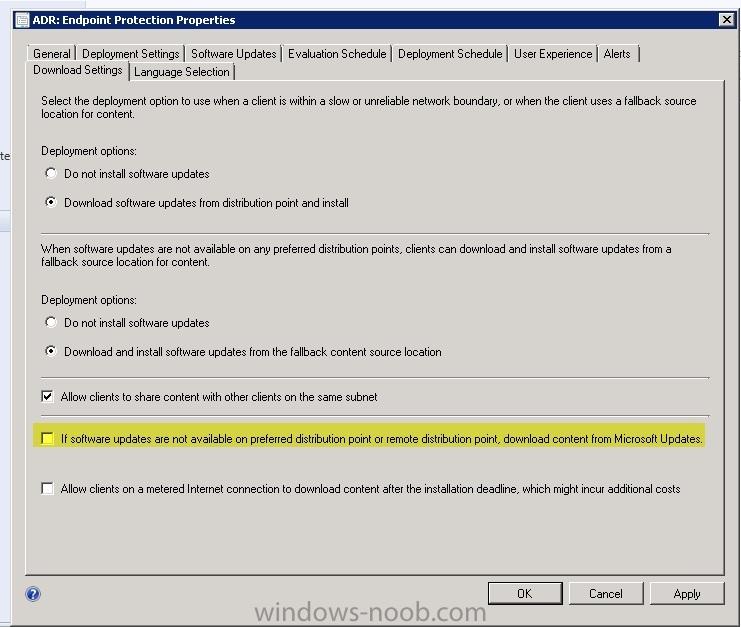

My window and highlight are from "Automatic Deployment Rules", but it looks like your window is from just a regular "package". I don't think you are in the same window. Go to Software Updates / Automatic Deployment Rules and right-click on your ADR; that's how I got to that screen.

-

Remote SUP for Internet Clients on SCCM 2012

Ocelaris replied to lord_hydrax's question in Software Update Point

So I had a marathon 4-hour call with Microsoft today, but got it working. The issue was probably some misunderstanding of SSL, certs and IIS/WSUS configuration.

First issue: the secondary site didn't have SSL configured for WSUS. Despite having the "ports" configured through the WSUS console and the CM console, it wasn't configured properly. You can check HKLM\Software\Microsoft\Update Services\Server\Setup for "UsingSSL" to see whether it is 1 or 0. You run "c:\program files\update services\wsusutil.exe configuressl" once you get it set up right. To configure SSL check this thread; basically all the virtual directories have to be configured properly. http://technet.microsoft.com/en-us/library/bb633246.aspx

Also, our internal MP couldn't talk SSL to the DMZ MP (WSUS over 8531) because we had a 3rd-party cert which had our external DMZ hostname, not our internal one, i.e. domain.com vs. domain.firm. To test, we launched the WSUS console on the internal MP, tried "connect to another server", entered the DMZ server and port 8531, and it could not connect. So we ditched the 3rd-party cert and used my DP web server certificate, and included both the External.domain.com and Internal.company.firm names in the DNS field. Basically our 3rd-party cert would only take 1 domain, but my internal root CA is flexible. The downside is that it takes away our ability to use mobile devices and Macs, because our certificates are distributed through Windows AD. Lots of logs; I can go into more detail while it's fresh if it interests anyone.

Also, my "AD Discovery" made IP address ranges, and it works great! I do have the "AD Sites" boundaries, but you can delete those. The forest discovery finds "subnets", but really it makes IP address range boundaries based on AD Sites and Services.

In your ADRs, do you let them download from Microsoft Update? I would make sure your rules specify "don't use Microsoft Update". I have an IBCM setup that I'm working on, so that my client machines which roam between internet and intranet get their updates from the IBCM. They can do all their regular CM stuff over 443 just fine, and they find the MP. If you look in LocationServices.log on your client, does it find the IBCM? My clients out on the internet absolutely do not pull from Microsoft Update. Look in your c:\windows\windowsupdate.log. In mine (below) you can see that I uninstalled my 2007 client, and in the interim before the 2012 client was installed it got it into its head that it was going to go out to MS, but then you can see it says "update not allowed to download". This should be possible unless I'm misunderstanding you. When I get my IBCM figured out I'll let you know; I'm having trouble getting the client to swap over from my intranet SUP to the IBCM, even though software installs work fine.

2013-08-13 16:24:28:670 816 fe4 DnldMgr Regulation server path: https://update.microsoft.com/v6/UpdateRegulationService/UpdateRegulation.asmx.

2013-08-13 16:24:28:858 816 fe4 DnldMgr *********** DnldMgr: New download job [updateId = {BF12E0F2-6E07-4A7A-99CD-401D7623E886}.201] ***********

2013-08-13 16:24:28:858 816 fe4 DnldMgr Regulation: {9482F4B4-E343-43B6-B170-9A65BC822C77} - Update BF12E0F2-6E07-4A7A-99CD-401D7623E886 is "Priority" regulated and can NOT download. Sequence 8829 vs AcceptRate 2074.

2013-08-13 16:24:28:858 816 fe4 DnldMgr * Update is not allowed to download due to regulation.
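The cert problem in this post comes down to "does the certificate's DNS name list cover every hostname the servers use to reach each other". A rough Python sketch of that check follows, using the two names from this post; the matching rule (case-insensitive, with a leading wildcard covering one label) is a simplification I'm assuming here, not how Windows validates certificates in full.

```python
def name_matches(pattern, hostname):
    """Case-insensitive match of one certificate DNS name against a hostname.

    A leading "*." wildcard is allowed and covers exactly one label.
    """
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if pattern.startswith("*."):
        head, _, rest = hostname.partition(".")
        return bool(head) and rest == pattern[2:]
    return pattern == hostname


def uncovered_hostnames(cert_dns_names, hostnames):
    """Return the hostnames that none of the certificate's DNS names cover."""
    return [h for h in hostnames
            if not any(name_matches(p, h) for p in cert_dns_names)]


if __name__ == "__main__":
    needed = ["External.domain.com", "Internal.company.firm"]
    # Single-name cert, like the 3rd-party one: the internal name is left out.
    print(uncovered_hostnames(["External.domain.com"], needed))
    # Cert from the internal CA with both names in the DNS field: nothing left out.
    print(uncovered_hostnames(needed, needed))
```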
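If you want to find these regulation blocks across a big windowsupdate.log, a short Python sketch like this picks them out. The pattern is based only on the log lines quoted in this post, so treat it as an assumption about the wording rather than a complete parser.

```python
import re

# Matches the 'is "Priority" regulated and can NOT download' line quoted in the post.
REGULATED = re.compile(
    r'Update (?P<update_id>[0-9A-Fa-f-]{36}) is "(?P<kind>[^"]+)" regulated '
    r'and can NOT download'
)

# Two of the lines quoted in the post.
SAMPLE = [
    '2013-08-13 16:24:28:858 816 fe4 DnldMgr *********** DnldMgr: New download job '
    '[updateId = {BF12E0F2-6E07-4A7A-99CD-401D7623E886}.201] ***********',
    '2013-08-13 16:24:28:858 816 fe4 DnldMgr Regulation: '
    '{9482F4B4-E343-43B6-B170-9A65BC822C77} - Update '
    'BF12E0F2-6E07-4A7A-99CD-401D7623E886 is "Priority" regulated and can NOT '
    'download. Sequence 8829 vs AcceptRate 2074.',
]


def regulated_updates(log_lines):
    """Return (timestamp, update_id, kind) for each update blocked by regulation."""
    blocked = []
    for line in log_lines:
        match = REGULATED.search(line)
        if match:
            # The first two whitespace-separated tokens are the date and time.
            timestamp = " ".join(line.split()[:2])
            blocked.append((timestamp, match["update_id"], match["kind"]))
    return blocked


if __name__ == "__main__":
    for entry in regulated_updates(SAMPLE):
        print(entry)
```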

-

Remote SUP for Internet Clients on SCCM 2012

Ocelaris replied to lord_hydrax's question in Software Update Point

Be careful with CU1; SP2 should be out soon (later this summer or early fall) according to MS techs I've talked to. We did CU1 because we were about ready to roll out. I don't think it necessarily fixed anything, but it definitely broke the client reconciliation inbox: CCR records were piling up. Did you find a reason not to do AD discovery for boundaries? I started making all sorts of boundaries, but then said the heck with it and just did AD Discovery, and it seemed to do a pretty good job. The client finds the IBCM fine and can do software installs through BITS etc. (443), but the SUP doesn't roll over when client discovery does, for some reason. If you look in c:\windows\windowsupdate.log it will tell you where it pulls from, and despite finding a management point on the internet, it doesn't switch over to the DMZ SUP.

Remote SUP for Internet Clients on SCCM 2012

Ocelaris replied to lord_hydrax's question in Software Update Point

Sorry for the long delay. The shared DB worked great and sync works well, but I'm now struggling to get clients to swap over to the IBCM for software updates. I'll let you know when I get that working. I did 2012 SP1 and just did CU1, and started having some random issues with CCR records; I had to add some random SQL code to get it to work, and MS had to help on that one.