Search the Community

Showing results for tags 'dell'.

-

I have an OSD task sequence for Windows 11 22H2 being deployed to new Dell OptiPlex 7000s. The Dells are going to sleep during the "Setup Windows and Configuration Manager" step of the task sequence. I have checked the "Run as high performance power plan" box in the More Options tab, but the PC sti...

- 2 replies

Tagged with: dell, power management (and 2 more)

-

Hi everyone, I'm a new Level 2 technician. I was previously a Level 1 technician, where my main role was helping users troubleshoot issues on their computers. Recently a few colleagues from Level 3 started helping me get into the SCCM environment, where I am flourishing from their wisdom, so found you...

-

Hello everyone, I need an answer to a simple question. I'm in the process of implementing MDT in my organization, where we have Dell Latitude laptops that come with an OEM license. From my understanding, the OEM license is stored in the BIOS of the computers (correct me if I'm wrong)...

-

I'm trying to install DCIS 4.2 on my computer with System Center Configuration Manager 2012 R2 console installed. The installer runs until it gets to "Importing Custom Dell Reboot Package" and then goes right into "Rolling Back Option". I've tried this on a system with the SCCM Console installed fre...

- 1 reply

Tagged with: sccm 2012 r2, dcis (and 1 more)

-

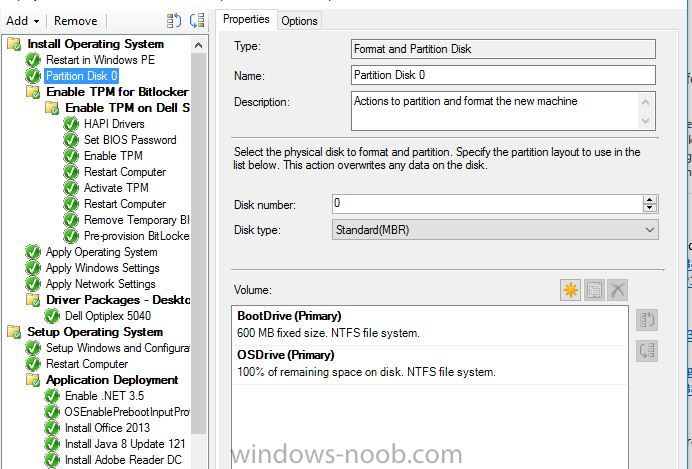

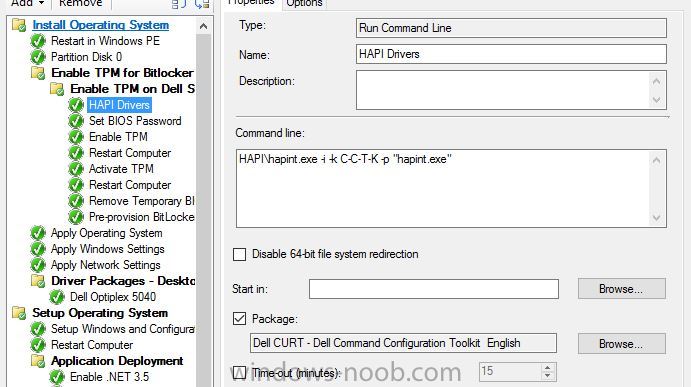

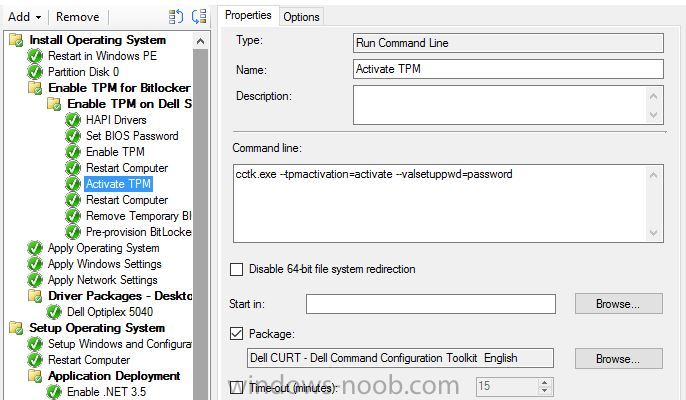

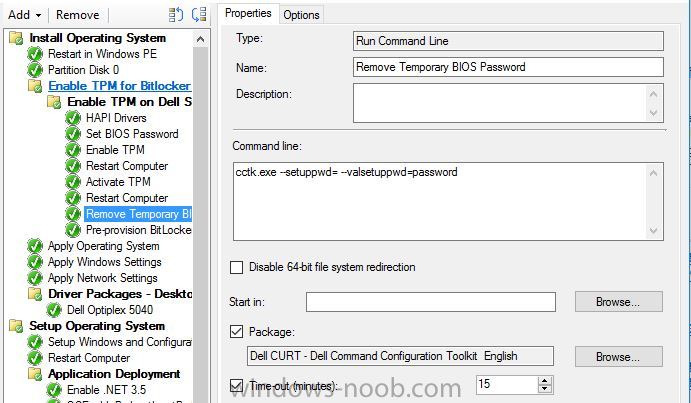

Hello, I've looked on many forums and I am trying to find a way to enable BitLocker using a task sequence so I don't have to set up every single laptop manually. I downloaded the Dell CCTK, created a package using Dell\X86_64, and included all the conte...

-

Hello, I'm having a problem enabling BitLocker on Windows 10 v1607 during the task sequence for one model laptop: Dell Latitude E5450 -- except that it does work about 10% of the time. I haven't been able to narrow it down to a specific hardware problem and different BIOS update versions and driver...

- 1 reply

Tagged with: Windows 10, TPM and BitLocker (and 4 more)

-

Hi, we are planning a Windows 10 deployment for the not too distant future and I am working on getting a new set of task sequences set up for this, incorporating some of the niggly things we've wanted to do for a long time but haven't had the time or patience for. One of those things is setting up...

- 3 replies

Tagged with: SCCM, Configuration Manager (and 2 more)

-

Hello, just wondering if anyone has managed to image the Dell XPS 13 9350? I've got SCCM 2012 R2 SP1 CU2, and when using my current TS with the Dell XPS 13 9350 it got to the first reboot (partitioning, apply WIM, check drivers, install client, etc.) and then failed... according to this link that is normal...

-

Published: 2013-06-06 (at www.testlabs.se/blog) Updated: - Version: 1.0 This post will focus on having the technical prerequisites ready and in place for a successful Domino/Notes migration. Before going into any details, if you are planning to do a migration from Domino and want to use Dell So...

-

NOTE: Cross-posted to TechNet Forums. I have used the Dell Client Integration Pack to import warranty data into SCCM. I imported their Warranty report, which gives me information on all Dell systems in the database. How can I go about limiting this report to return data only from a specific collectio...

-

Afternoon, recently I have noticed that some of our machines are not consistently joining the domain as part of the task sequence. I find the issue very strange; for example, the same laptop can take multiple attempts before finally going through and completing the task sequence. The issue occur...

-

I have a question for you guys. Have any of you used SCCM to image the Dell 7440 Ultrabooks with 16 GB of RAM in them? We have two models here, an 8 GB model and a 16 GB model. The 8 GB ones image perfectly fine; the 16 GB ones fail out in great fashion. The 16 GB ones even install Windows minus components......

-

I've been looking for a way to pull the warranty info of our PCs into SCCM. I found the Dell Client Integration Pack but don't know much about it. Does it work as advertised? Any gotchas I need to know about before installing it on our SCCM server? Any tips? Does it actually pu...

-

Software in reference image is gone after deployment

MeMyselfAndI posted a question in Deploying Operating Systems

Hi all, I created a reference image (Windows 7 x64) a few months ago. This reference image also contains a few applications like Office 2013, Silverlight... I've been using this reference image for a few months now. I've installed it on several types of Dell clients and everything works f...

- 3 replies

Tagged with: deployment, reference image (and 2 more)

-

Hello! I have been having an issue trying to get the correct storage drivers for a Dell T7400. I have used the Dell WinPE driver packs and the drivers that are listed on the Dell website. Has anyone been able to successfully PXE deploy this model? Every driver I have tried so far does not enable...

Tagged with: dell, deployments (and 4 more)

-

Hello All, I'm trying to image a Dell Precision M6800. It PXE boots and pulls the boot wim fine but once it gets into the task sequence area it will just reboot. I can't seem to pull an IP address to it at all but it PXE boots fine. This is the only laptop that does this. Even the previous M6700...

-

This will be a collection of posts regarding migrations: general in the first post, digging deeper in the following posts. Published: 2013-05-09 (on www.testlabs.se/blog) Updated: 2013-05-15 Version: 1.1 Thanks for the great input and feedback: Hakim Taoussi and Magnus Göransson P...

Tagged with: Exchange, Migrations (and 3 more)

-

Hello, I have a ~8 MB bootable ISO that contains the Dell diagnostics tools for laptops. Is it possible to somehow use this ISO to boot into the diagnostic tool from PXE using SCCM 2007?

-

I am attempting to import Dell E6510 CAB drivers into SCCM 2012, but every one of them fails except for the ST Micro driver. Upon completing the import wizard, the failure gives the following error: The selected file is not a valid Windows device driver. In the DriverCatalog.log on the SCCM se...

-

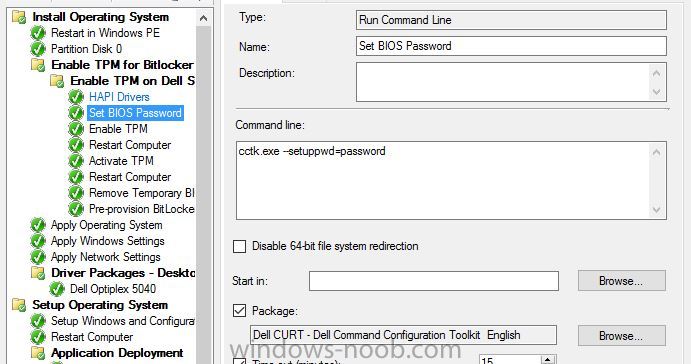

Does anyone know if there is a way to skip the Set BIOS password step in an OSD task sequence if there is already a password set? I want the task sequence to add the BIOS password if none exists and skip the step if one does. Thanks, Mike

-

Hello, I'm having a bit of trouble deploying Win7 to an OptiPlex 790. I have added the NIC driver to WinPE. It boots into WinPE, starts the TS, applies the OS, and applies the OptiPlex 790 driver package (latest CAB imported from Dell). On the next step after applying drivers, "Setup Windows and Co...

- 4 replies

Tagged with: Dell, OptiPlex 790 (and 2 more)

-

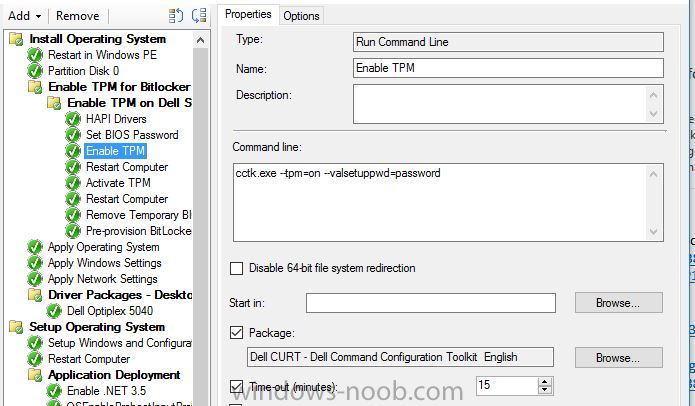

I have finally figured out the TS to set a BIOS password, enable and activate the TPM... However, the built-in 'Enable BitLocker' step fails, with no error codes and no logs. It appears to complete successfully, but it doesn't. I think I may need a script to take ownership. All of the scripts...

-

I am trying to enable and activate the TPM chip on the Dell machines we have. So far I have created the CCTK package, pushed it to my DP, etc., but it keeps failing at setting the BIOS password. I have been unable to get my task sequence to complete. Everything that I have read so far seems to lead...

- 3 replies

Tagged with: ConfigMgr 2012, Dell (and 3 more)

-

Can anyone give me a few tips on how to achieve this? I have an .exe file that I downloaded from DELL; I can use the -NOPAUSE parameter to pass to this file, to force a shutdown and to disable notifications. I created a package and I deployed it to a collection (as required) that queries all my...